The most fun thing about Dreamlands pirate boats is the sea shantaks

The Stochastic Game

Is Leng in Tibet, or the Dreamlands, or what? Nah, Leng is where the heart is.

By the way please don’t touch the heart. It’s still wet.

Very sad to see a bunch of idiots on the Willingdon Ave highway bridge with Canadian flags and “mandate freedom” signs. Good thing is that there were only like two dozen of them, but still…

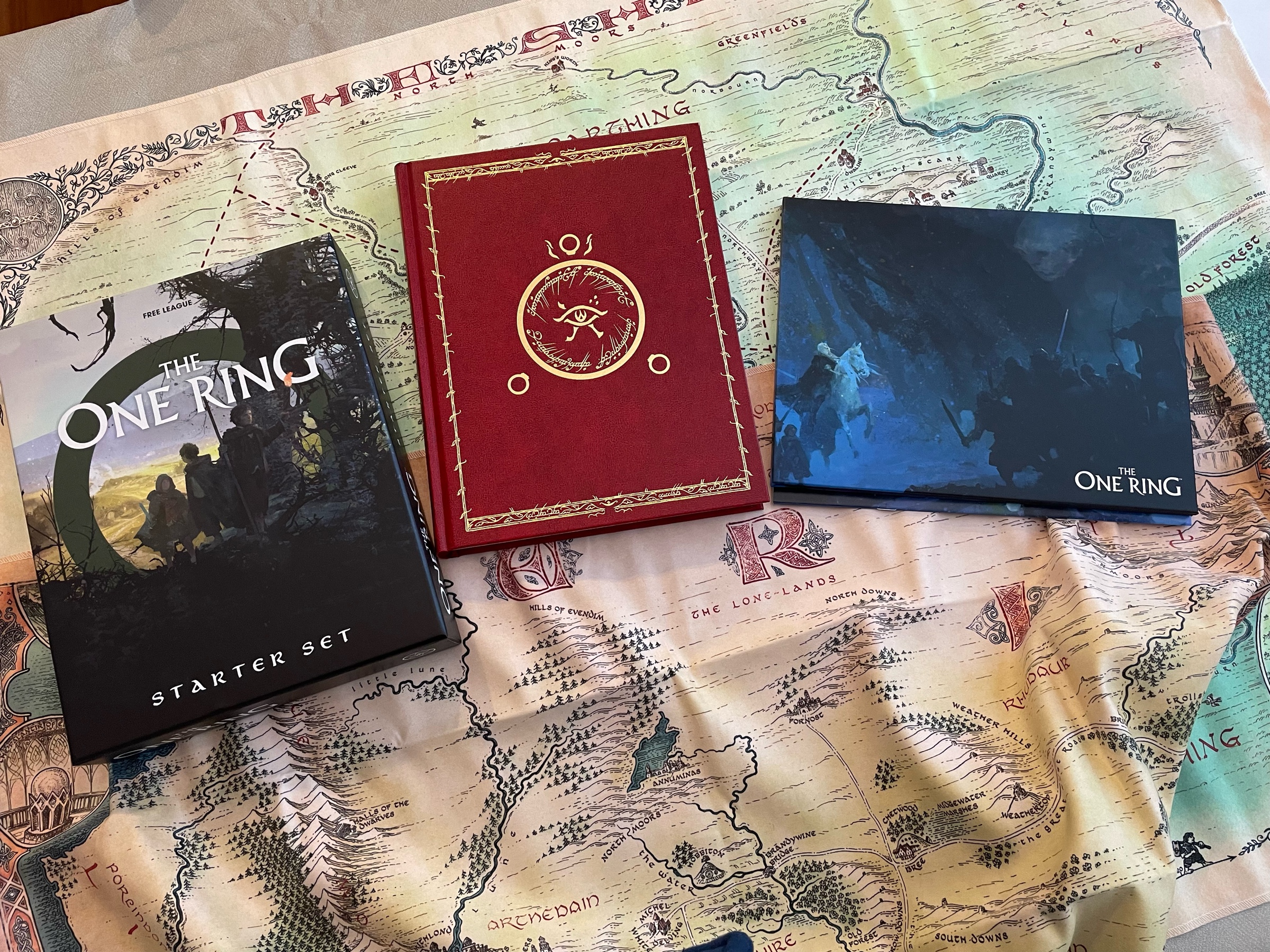

It looks like everybody received it weeks ago, but the Fellowship finally made it to western Canada!

You know you’ve got too many RPG PDFs when you have to reorganize game directories into alphabetical sub-directories

Or maybe scientists have it the wrong way! The Earth is flat but most of the time the light bends upwards towards the sky! #GloranthaLogic https://www.cbc.ca

“The garage is watered from the sprinklers. It also left a man’s decapitated body lying on the floor next to his own severed head. The head which, at this time, has no name.”

System doesn’t matter. As shown by my players who will just burn shit down and run away regardless of the system #ttrpg

Strike Ranks are RuneQuest’s THAC0

Excellent snow and weather conditions up here. And no wait at the ski lifts! Real moments of beauty when the sun rays pierce through the fog or light up snowflakes like thousands of fireflies