Good morning! I hope all you nerds currently at GenCon will stay safe and have a good time!

Pitch Meeting is, as always, very on point: https://www.youtube.com

Saw Thor: Love and Thunder with the kid yesterday. Big “meh”. Some funny stuff, obviously, but lots of dubious creative choices, stuff that doesn’t make sense, and bits that fall flat. Bottom tier of my Marvel rankings.

Good morning everyone! Here’s today’s good boy.

And now, in the “crypto-bros are morons” category, the news you all saw coming months ago: https://web3isgoinggreat.com

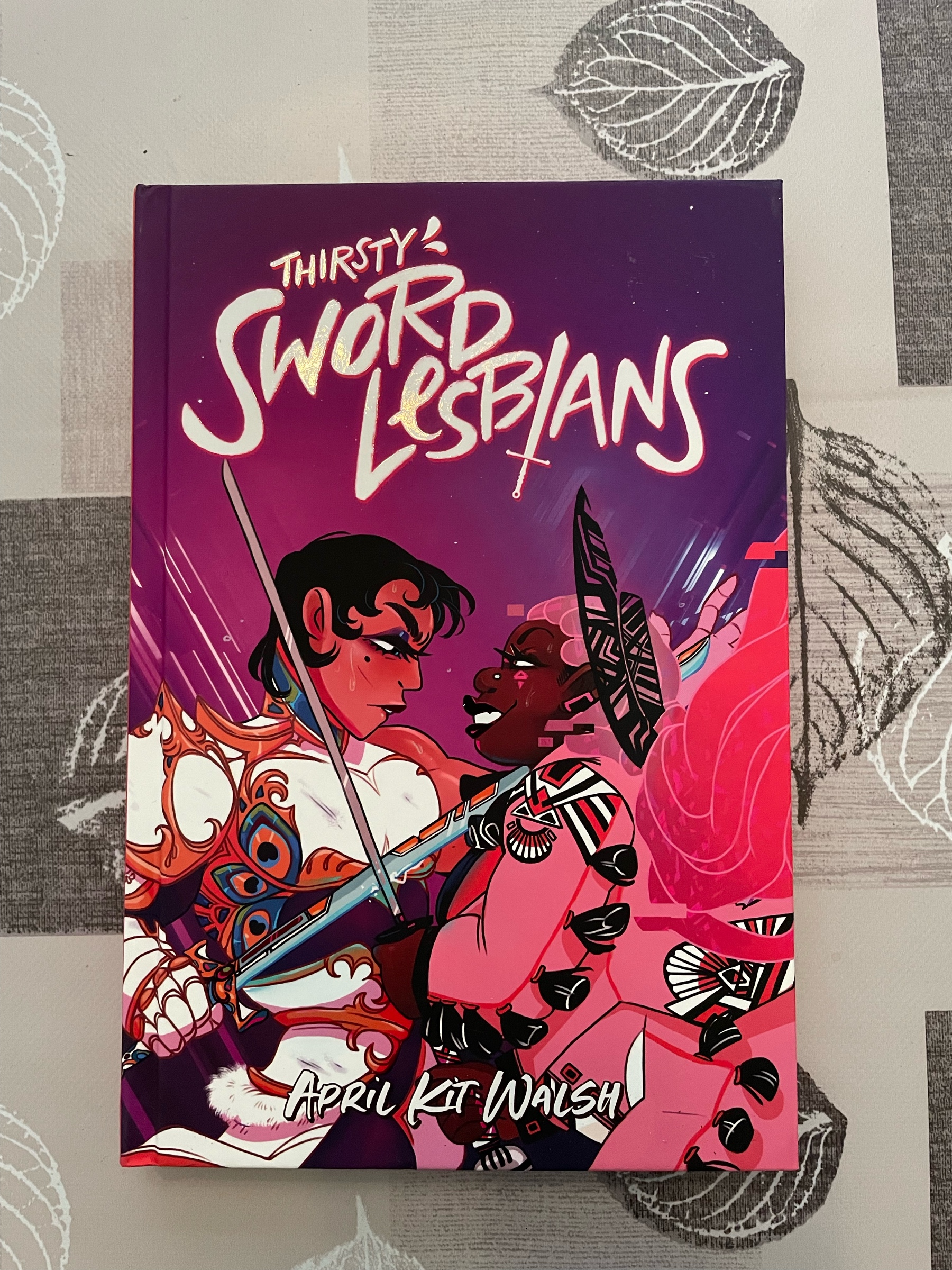

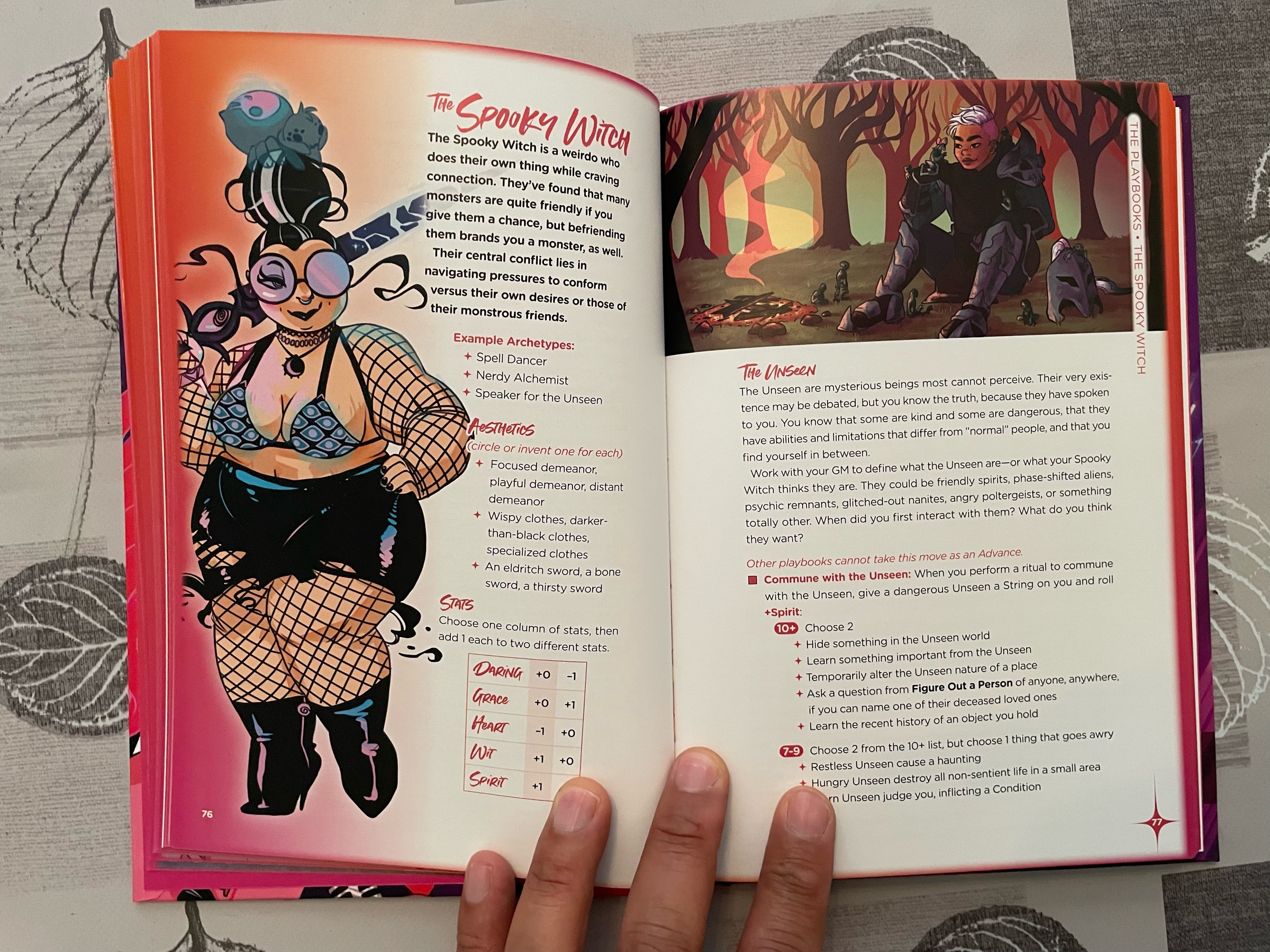

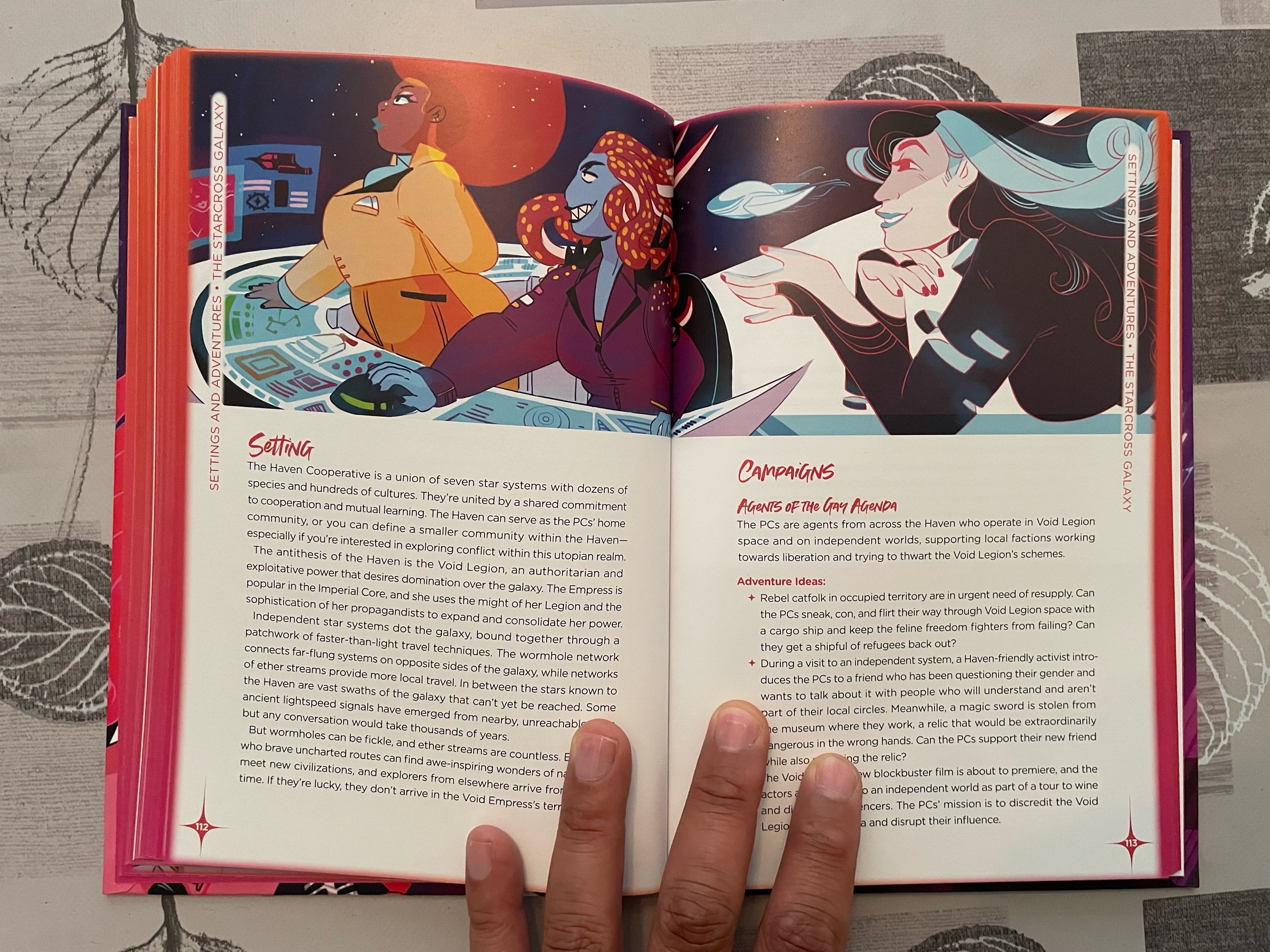

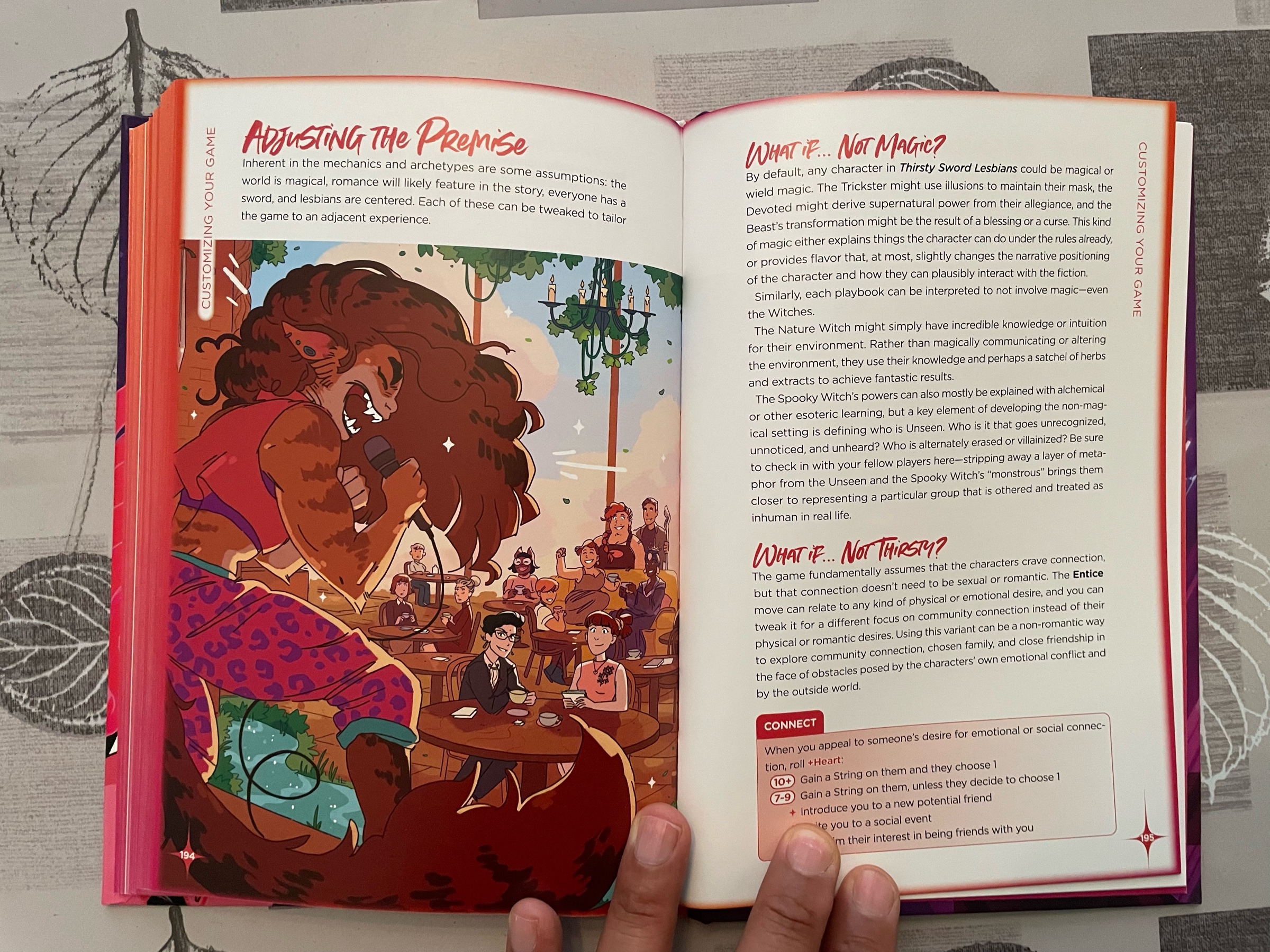

Check out these Thirsty Sword Lesbians!

Good morning! It’s going to be way too hot again here. But here’s a good boy for you.

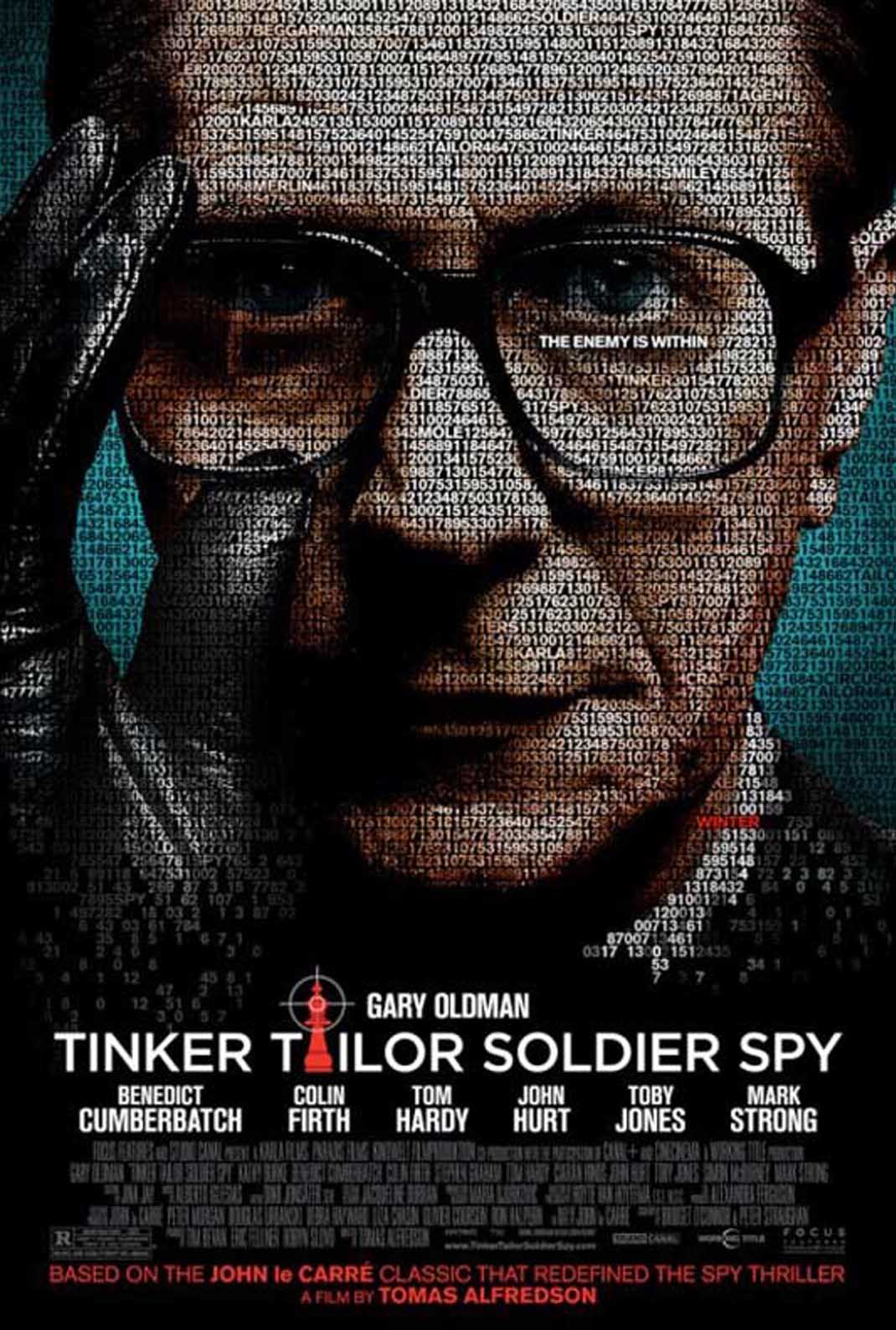

This was pretty good! Stellar cast, too.

The Sharp Objects miniseries was really good! But holy shit, the post-credits scenes after the last episode are something else.

Good morning! Don’t forget to drink lots of water today!