Watched Tremors last night with the older kid. He said it’s not very scary, so it’s “less of a horror movie, and more of a thriller”.

The Stochastic Game

“Robin’s Laws of Good Game Mastering” is indeed the best GMing book around, if only because it’s super short and to the point. Recommended! http://www.sjgames.com

This is really good: “Just Because They’ve Turned Against Humanity Doesn’t Mean We Should Defund the Terminator Program” https://www.mcsweeneys.net

Treasures of Glorantha is out on the Jonstown Compendium! I did a couple of illustrations in there. The affiliate link isn’t for me; it’s for the author… enjoy and share! #Glorantha https://www.drivethrurpg.com

Looks like all interesting children’s books start with a 12-year-old main character… What recommendations do you have for readers aged 8 to 11, besides Harry Potter and Roald Dahl stories?

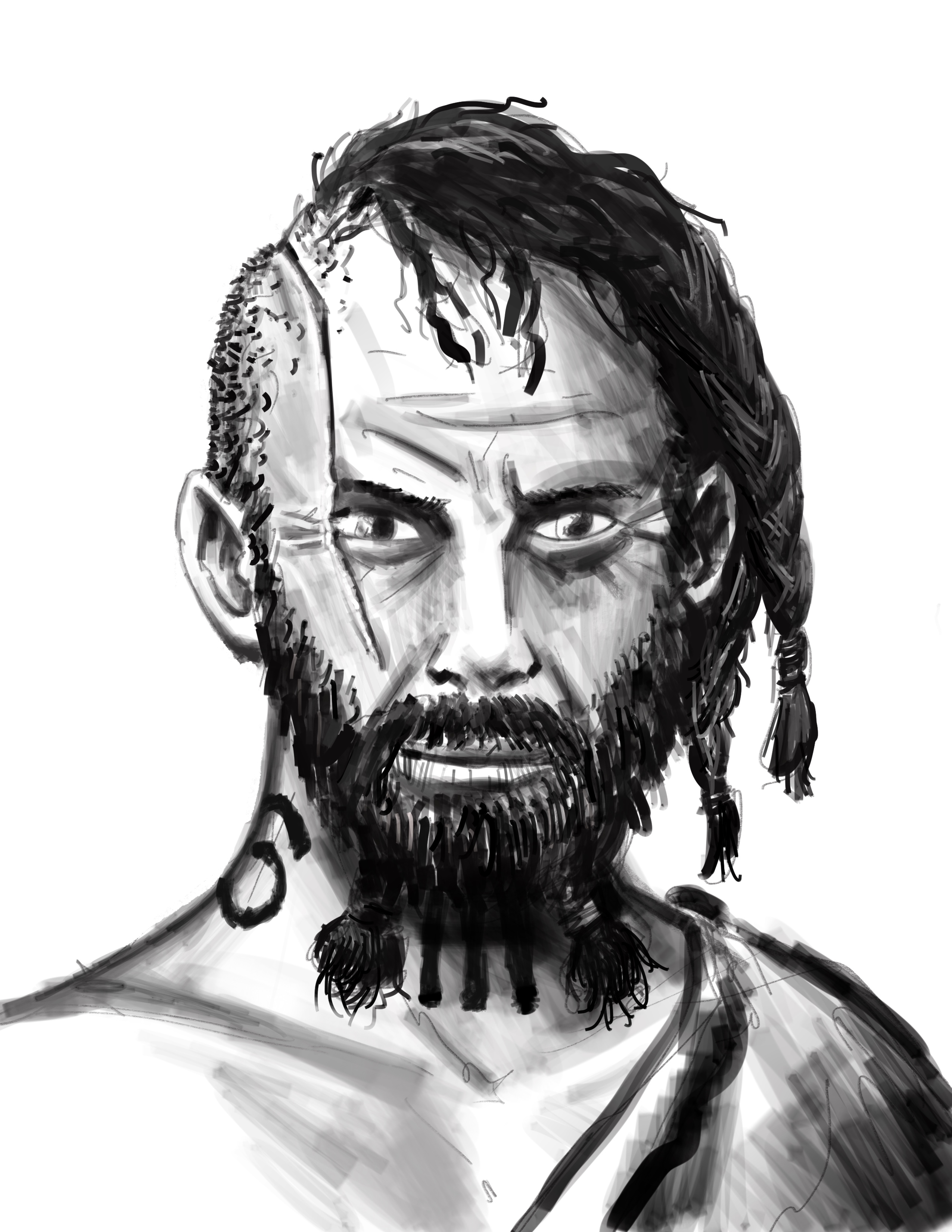

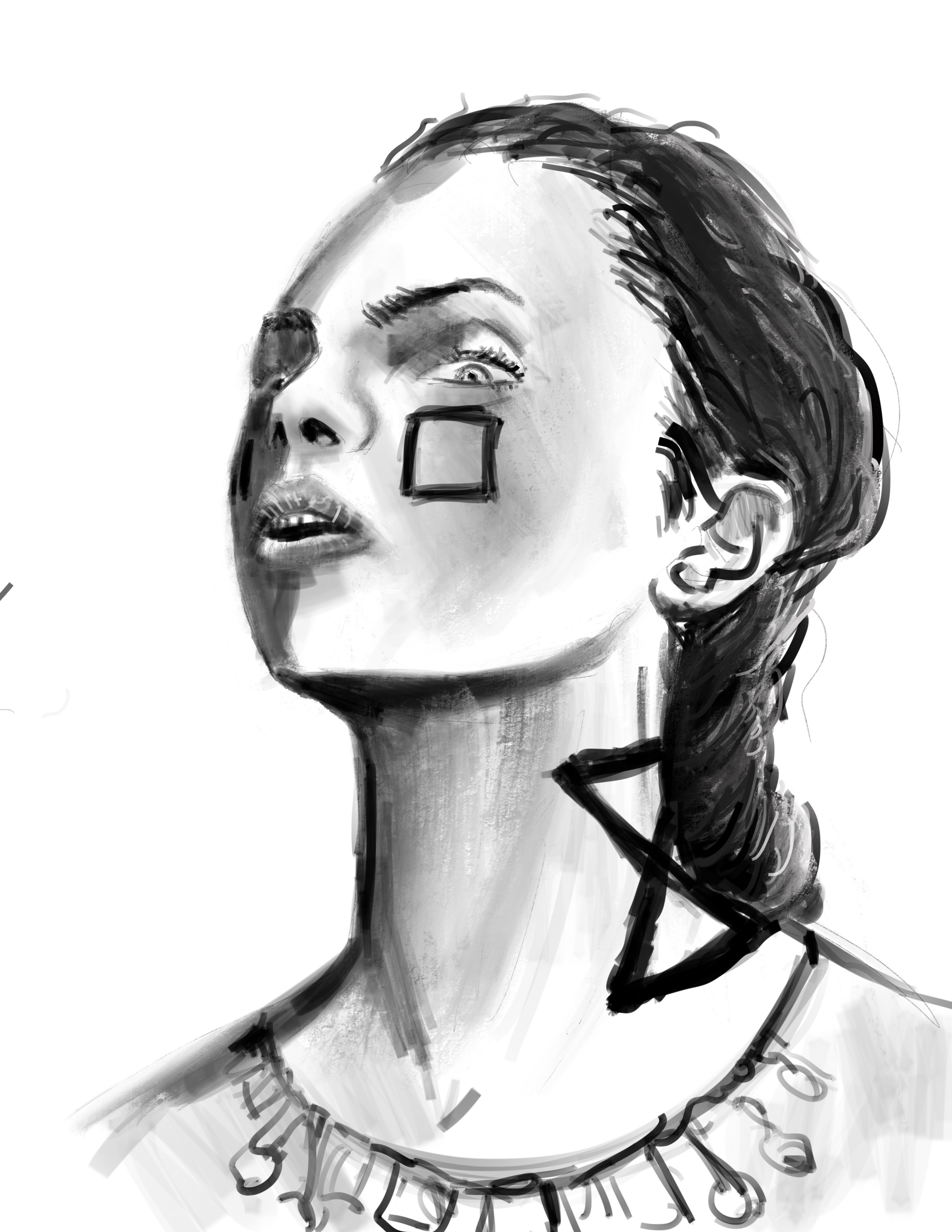

Warm-up sketches! Doing a few basic random #Glorantha clan people.

I’ve been playing the first Monkey Island (remastered) with the kids and… let’s say there’s been a lot of pirate insults thrown around lately. Also: they love speaking like Stan the salesman, especially the manic hand waving!

I am completely baffled by how the 13th Age rulebook assumes prior knowledge of D&D: it doesn’t list or explain character abilities! The only list is a short parenthetical (p30), and the only explanation is in the index entry (p310+).

I had a whole bunch of leftover cardboard boxes from all those RPG books I ordered recently… so I had to do something with them! What do YOU do with your cardboard? (And yes, I need to add some Runes and decorations.)

I found a stash of old unused stickers so I decided to decorate my old Linux laptop!