This was an appropriate T-shirt to wear this weekend.

Here are some of my latest doodles… trying out a few different things…

The stupid limitations of iPadOS are reaching a point where I’m just gonna say “fuck it” and buy a Cintiq to continue doing further semi-serious illustration work… 😑😤💸

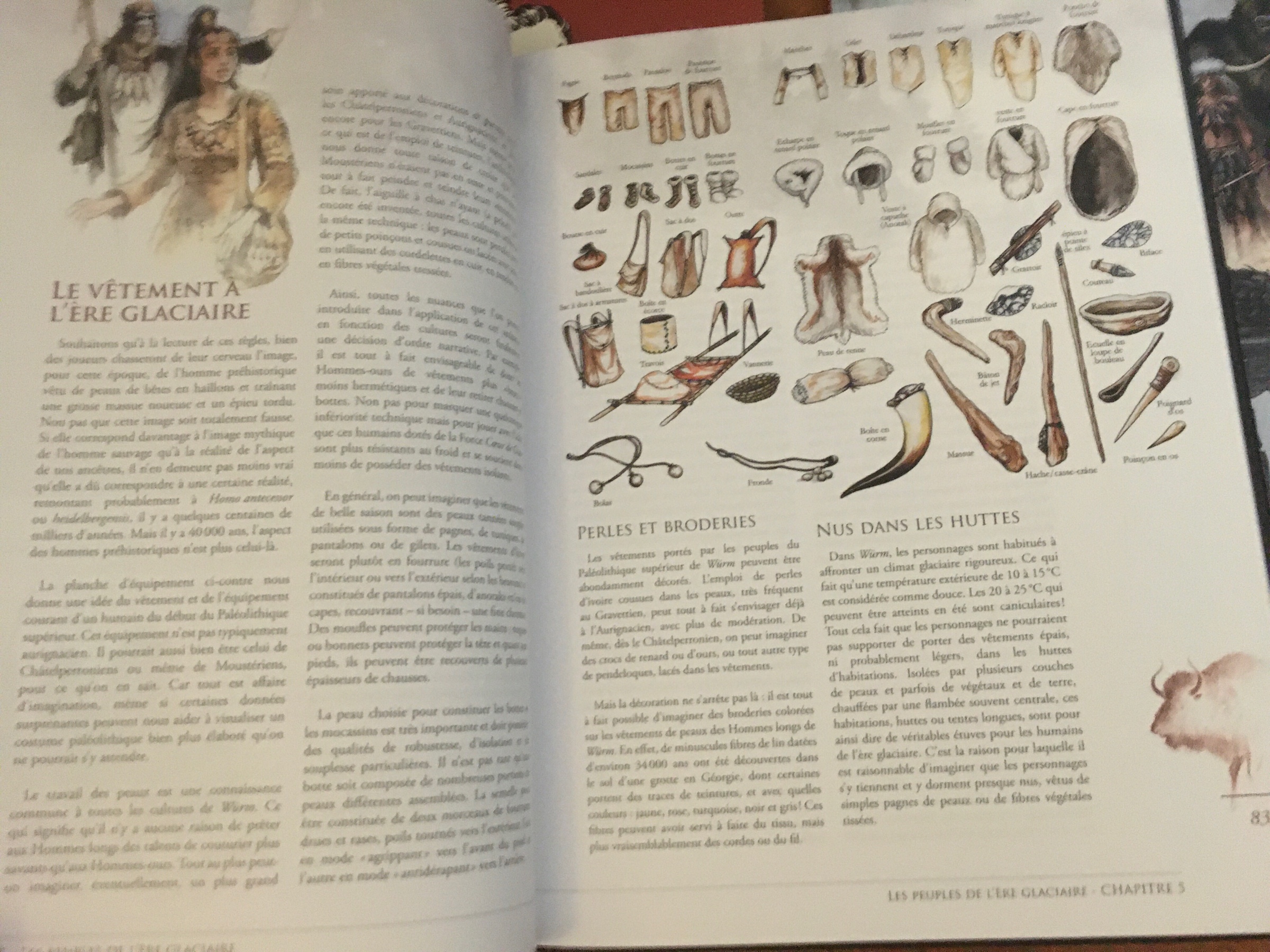

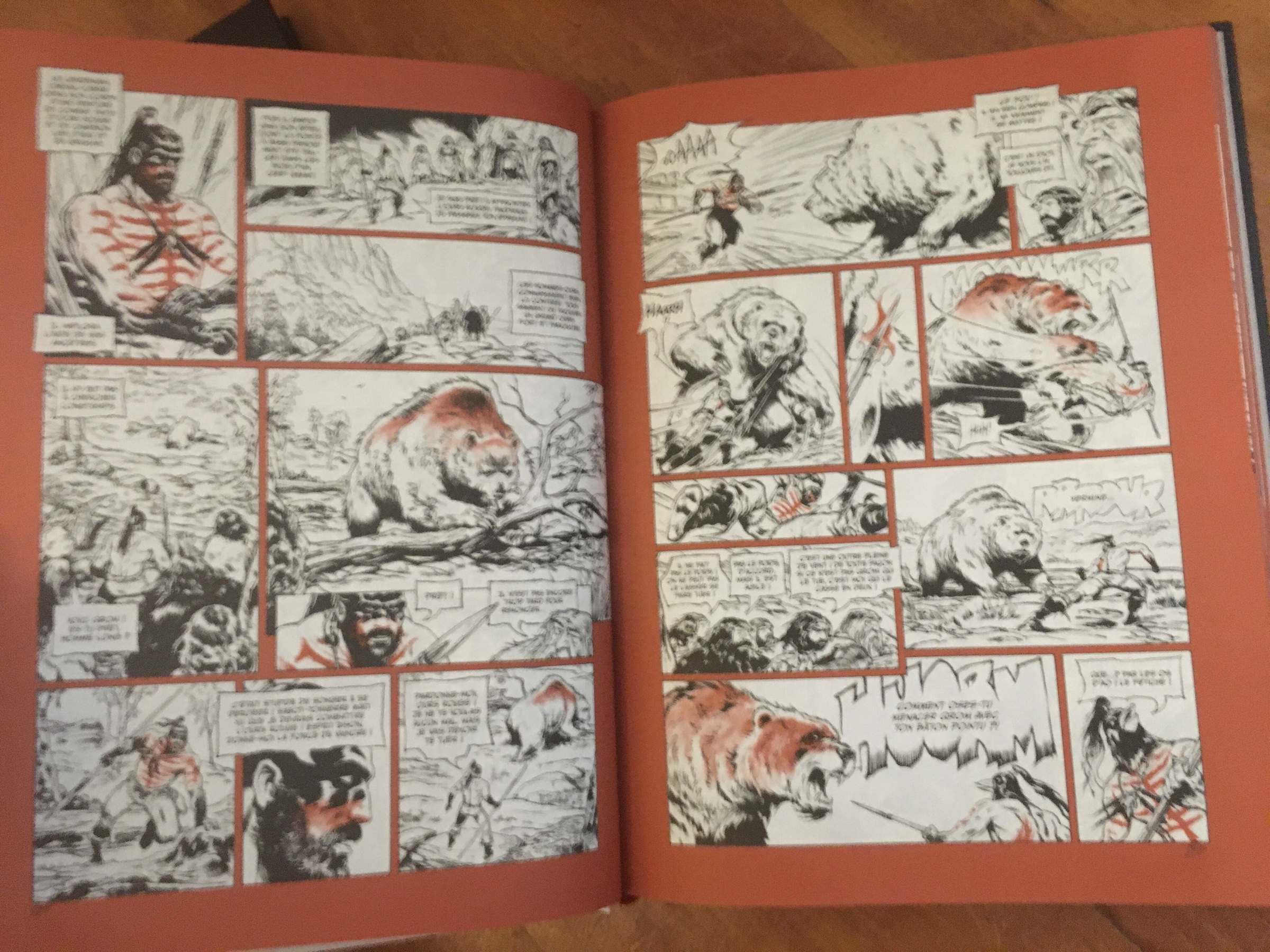

This thing arrived yesterday! Yes, it’s the brand new Würm 2nd edition, in French! And yes, there’s a comic book too! I didn’t expect all this so soon…

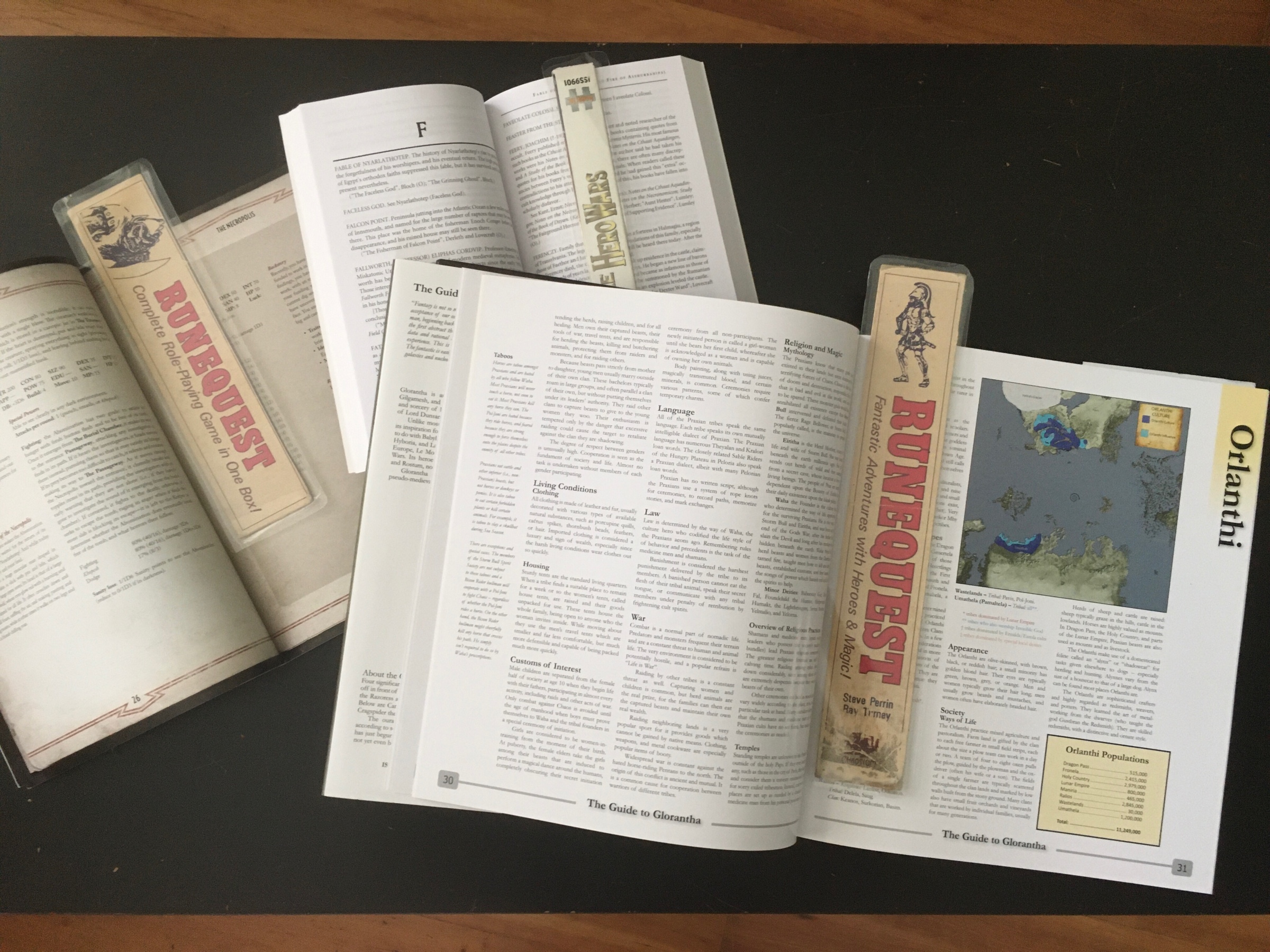

I had some leftovers from those RPG boxes I framed recently, so I started turning them into bookmarks… reading in style!

“The Parchments of Tam”, my Call of Cthulhu adventure for the Journal d’Indochine Kickstarter, has now passed 23k words… maybe I should have designed something a bit simpler for what might be my first published adventure 😅 #ttrpg

Wireless headphones: still a shitshow, where it’s actually more practical to own multiple headphones, each paired with one device. Remind me again how Apple “pushed the tech forward”? There’s been no progress.

I’m trying to (digitally) paint “for real”, as opposed to sketching/inking and colouring, and I’ve got no idea what I’m doing. It feels like vomiting colours until a couple of them look like something.

Spark email has been out for, what, six years on iOS? And there’s still no server-side search support… 😢😤

And for the record, I’d love for my house (and all the others around it) to be worth half of what it’s worth now. I’d vote for someone who can make it happen.

And as a landlord myself: we landlords are mostly useless to society so yes, bring on more paperwork and taxes.