Good morning Burnaby! Nice spider webs you have everywhere here…

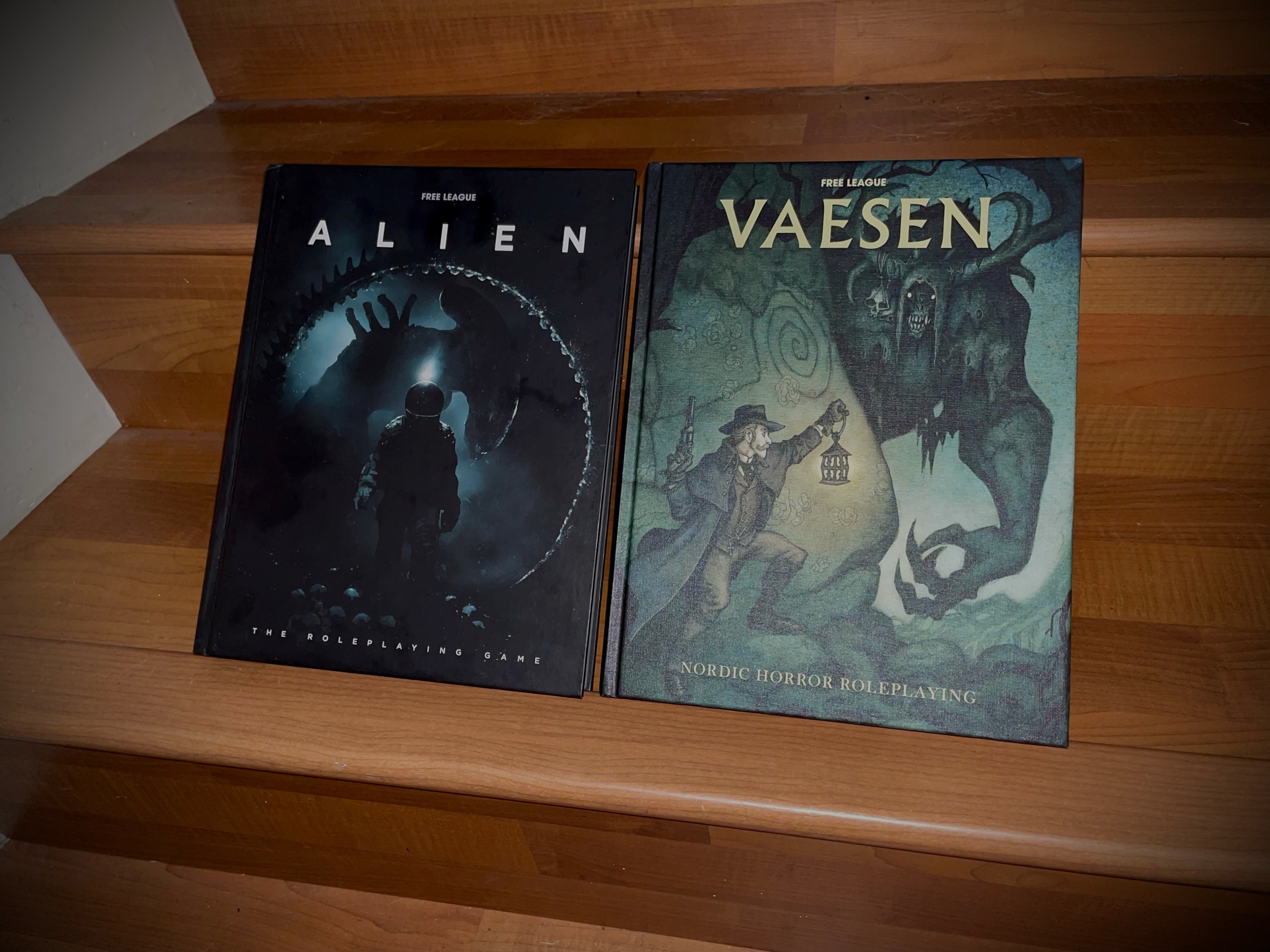

Congrats to Free League on all the ENNIE awards, especially the unsurprising but still well-deserved fan favourite publisher award! Vaesen and Alien are great games and YZE is a great system. Check them out!

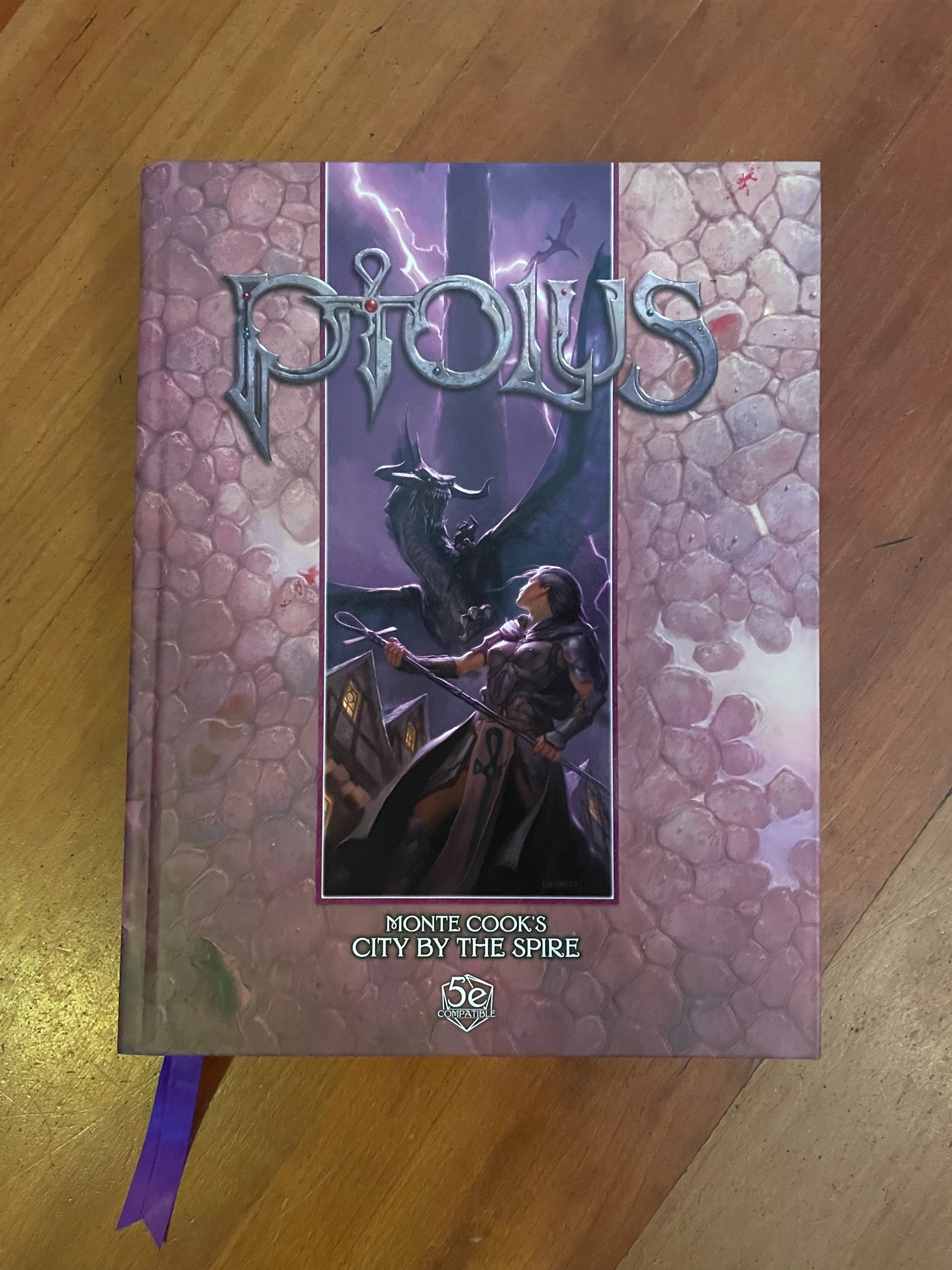

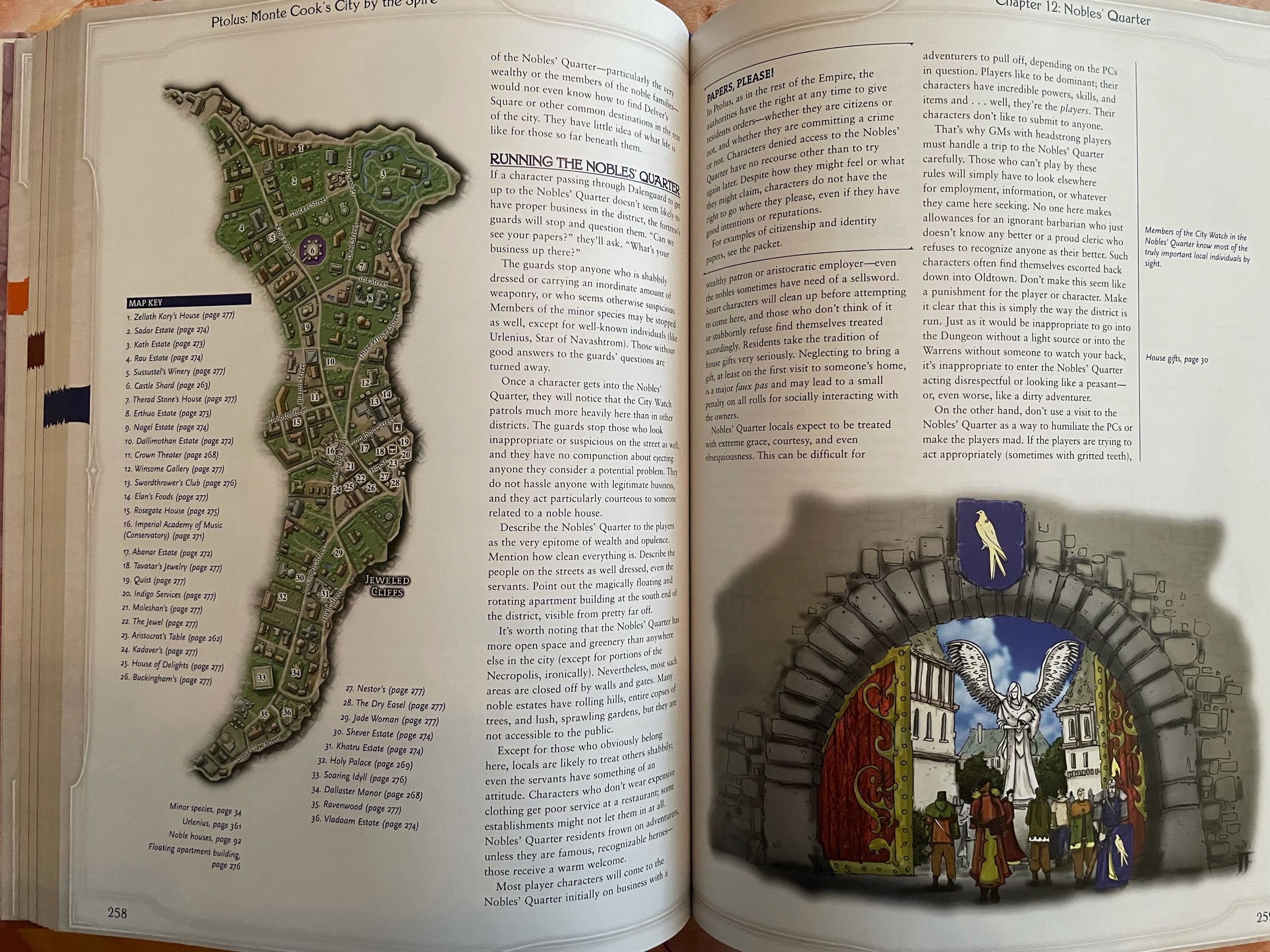

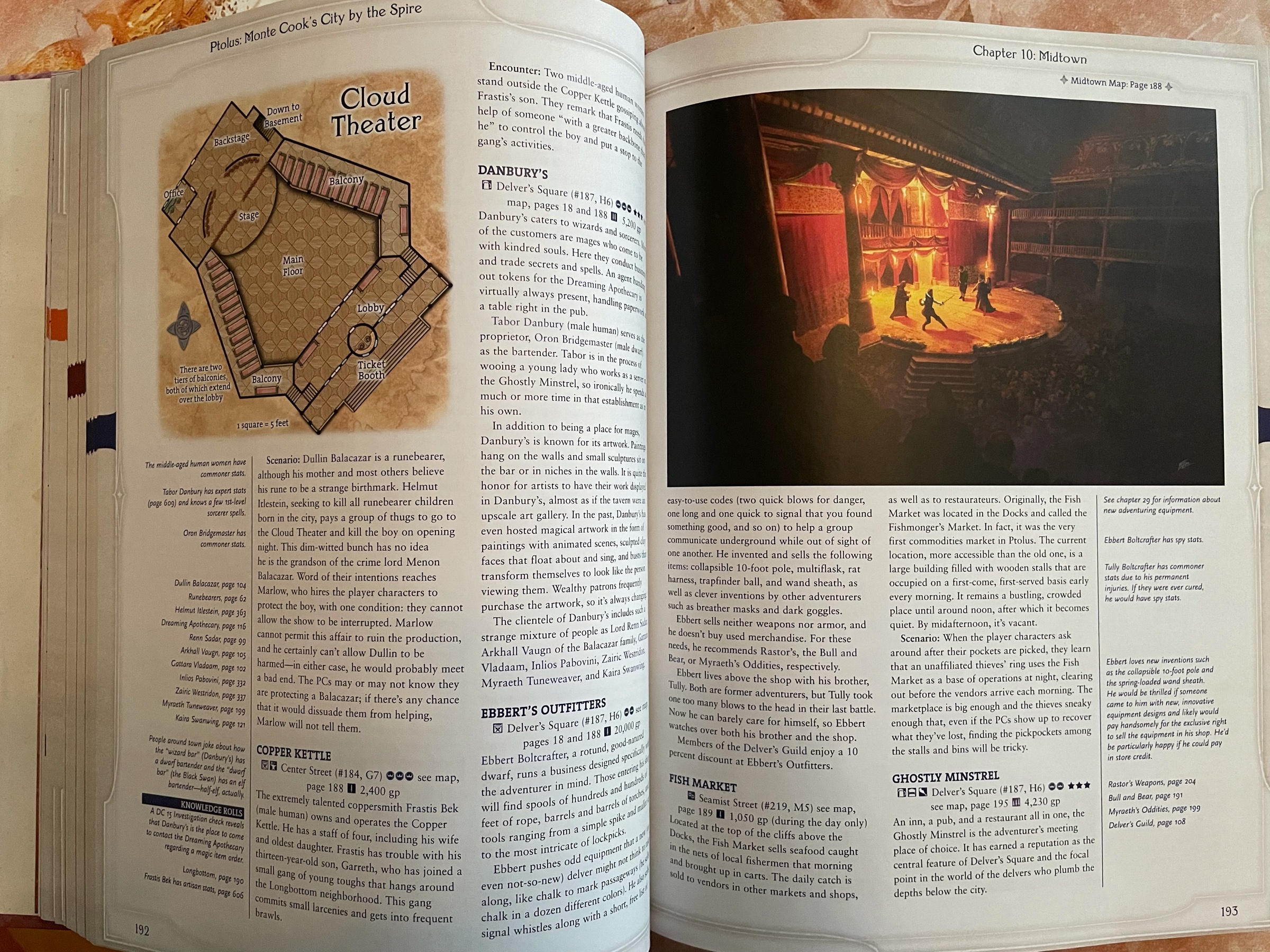

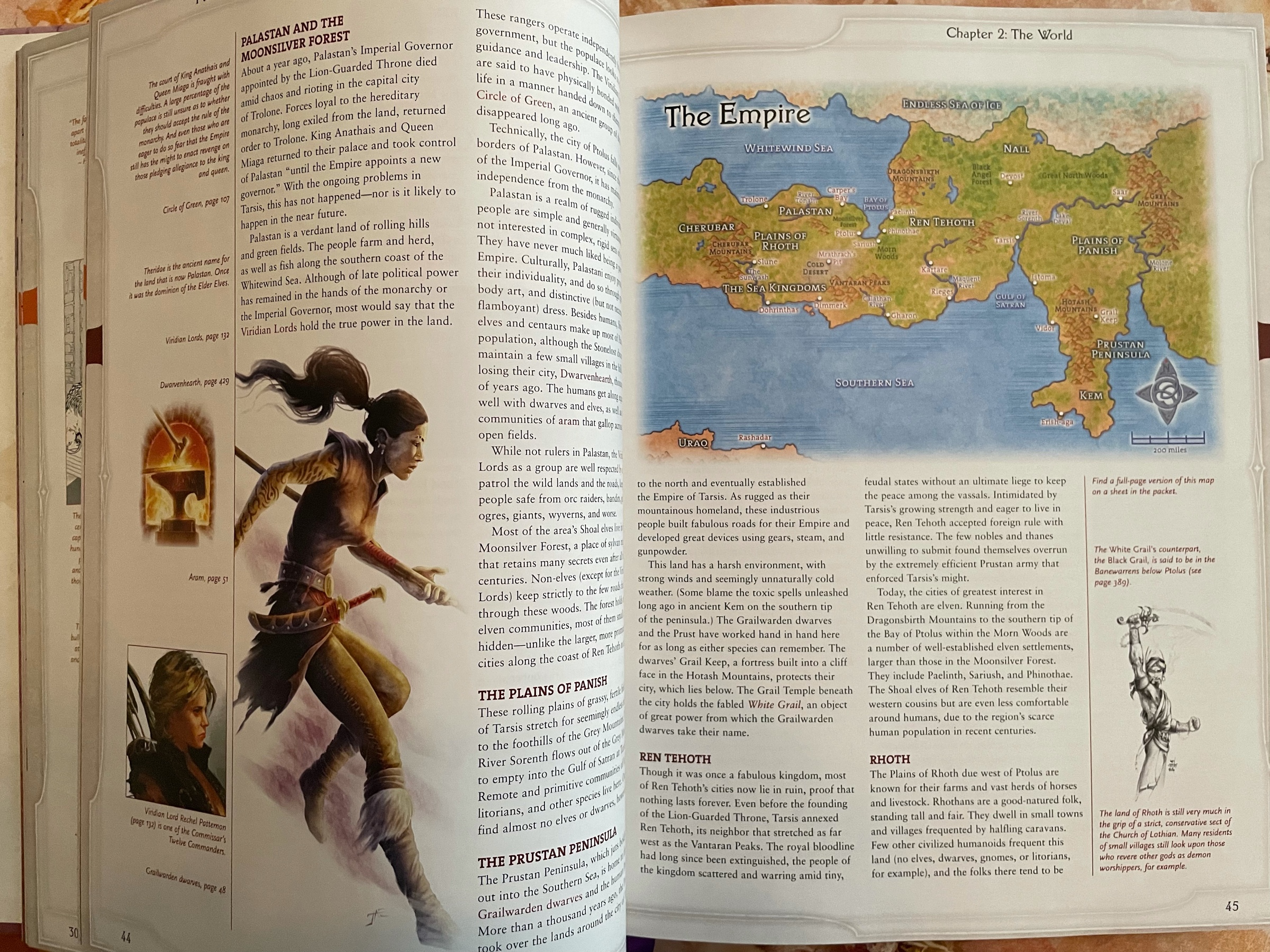

Ptolus is a fucking monster of a book. Seriously, it’s huge. I don’t think I’ll ever play it as is, but I’ll definitely borrow ideas here and there! It’s full of them.

My, the new focus and cinematic features in the iPhone 13 camera look VERY interesting

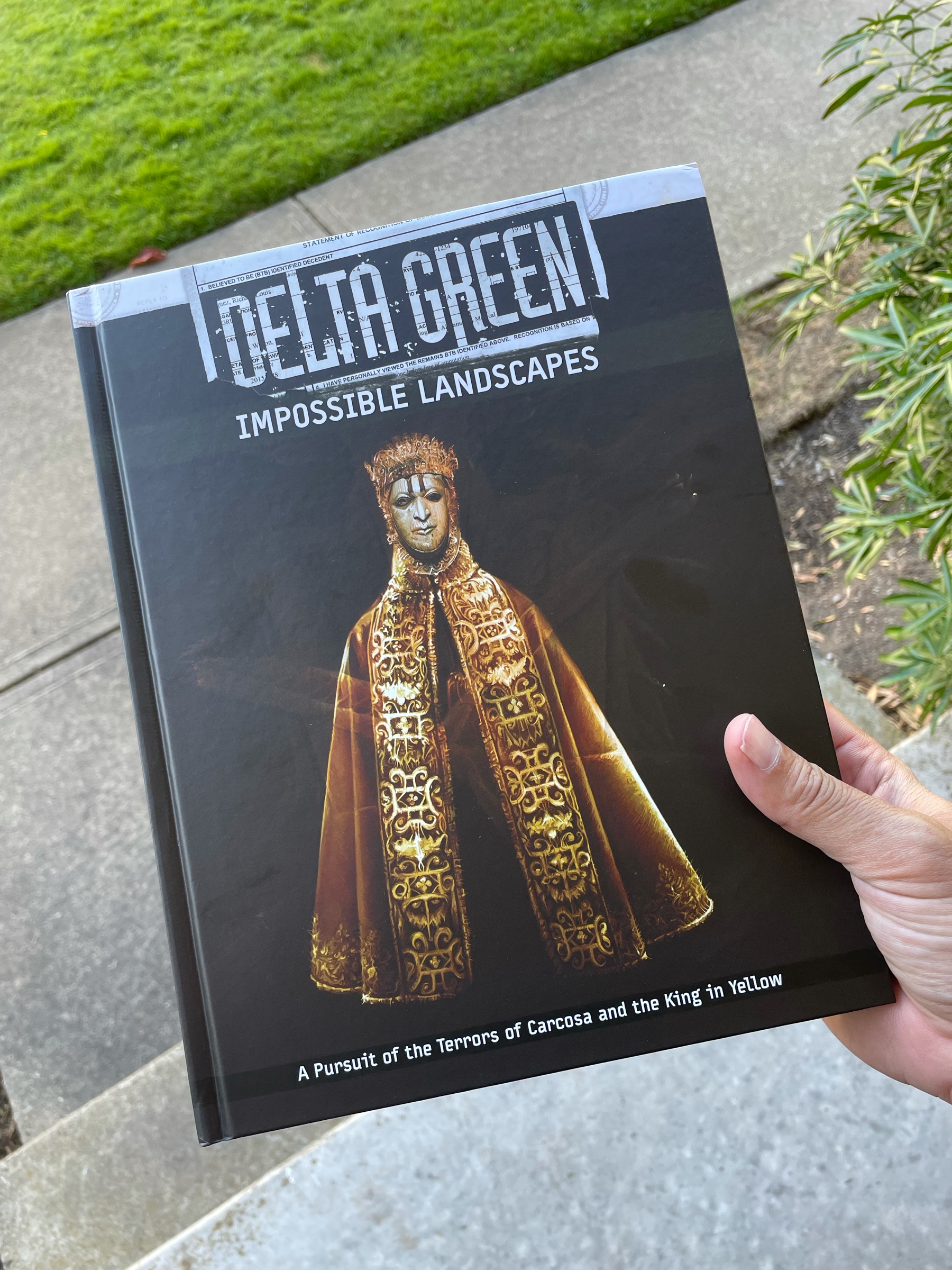

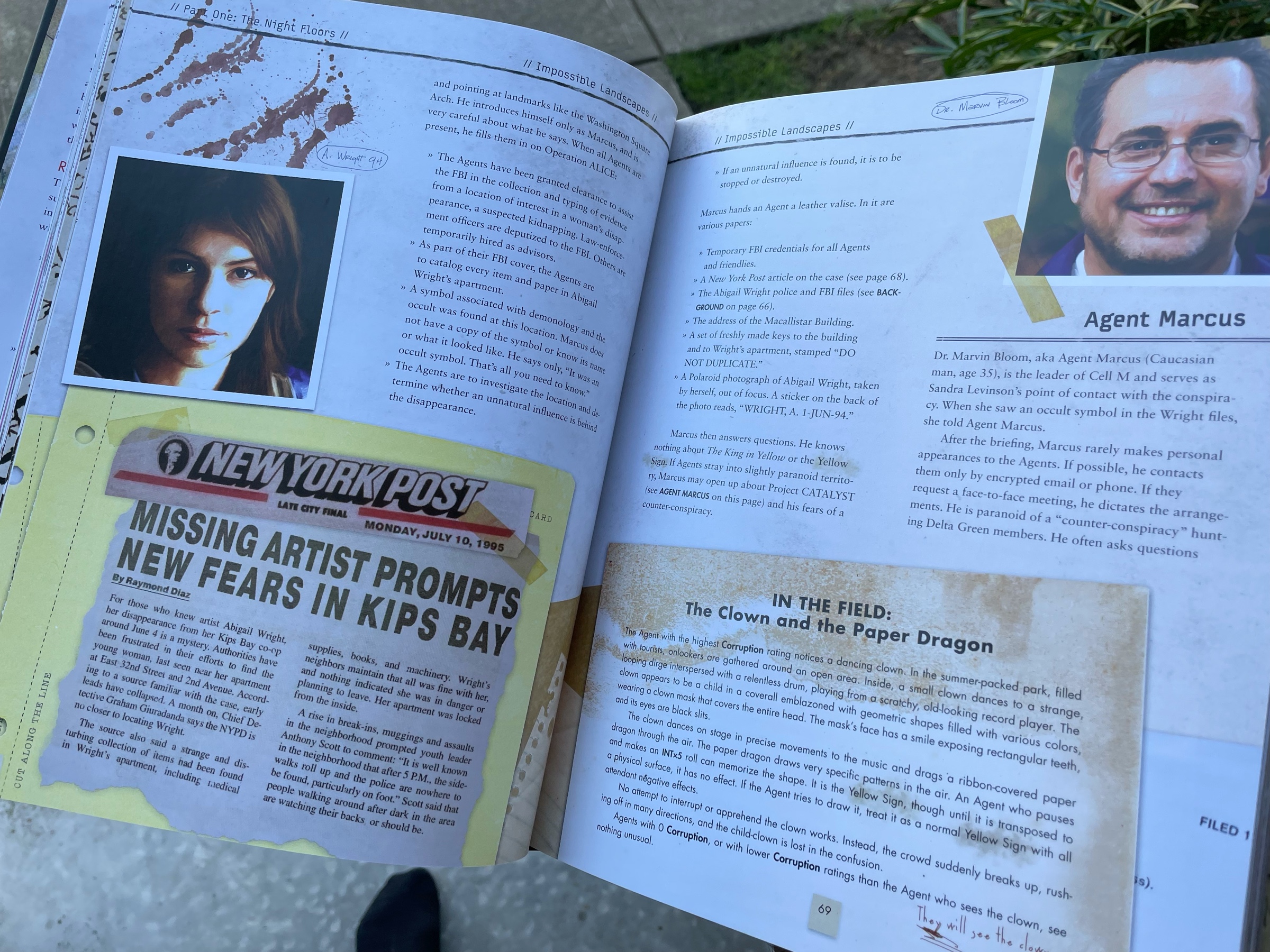

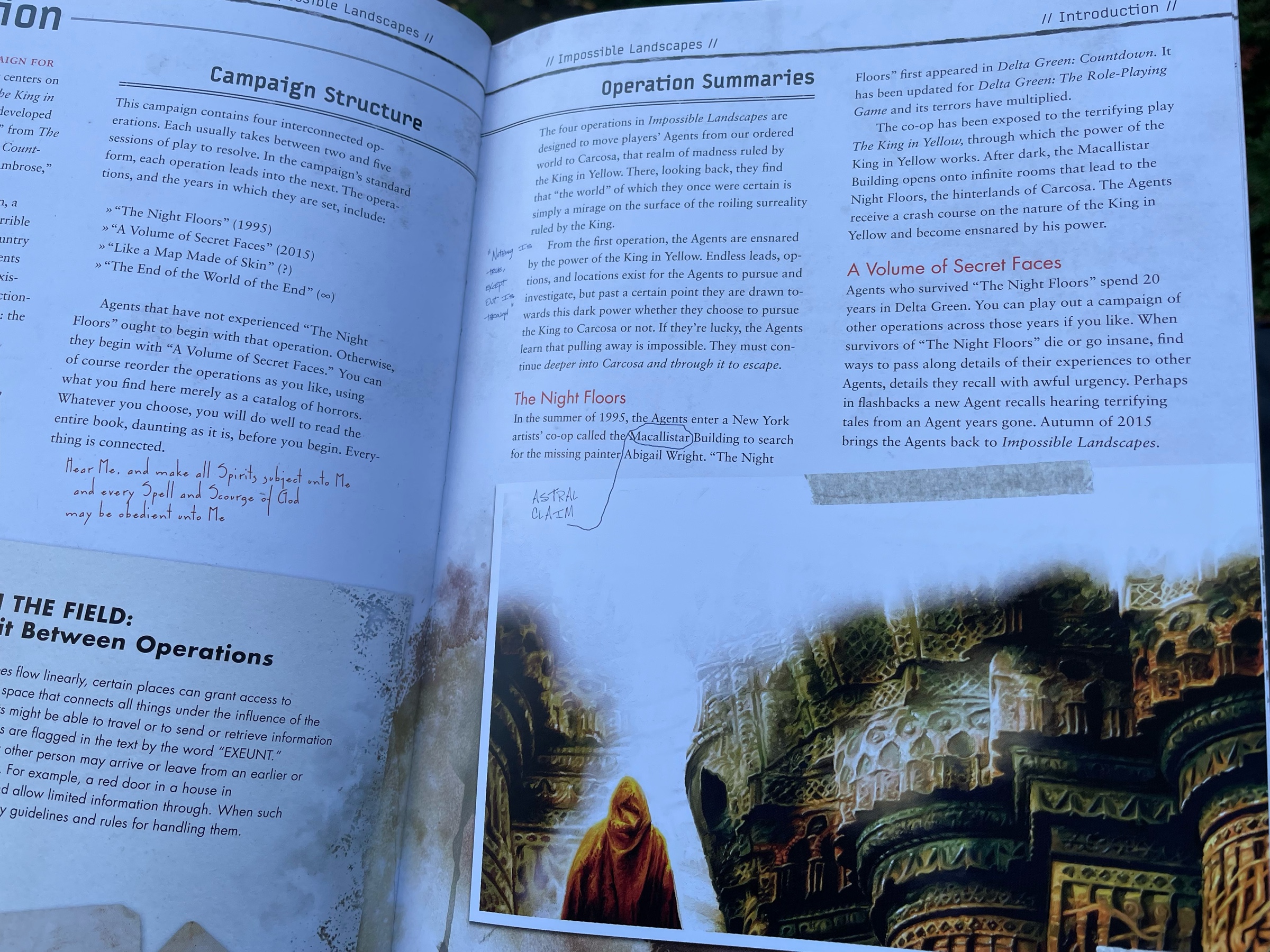

This book was left on my doorstep yesterday. I don’t remember ordering it but I had dreams about it these past few weeks. Is it my handwriting in it? Can’t be, it’s part of the printed pages. Hold on, my nose is bleeding, be right back.

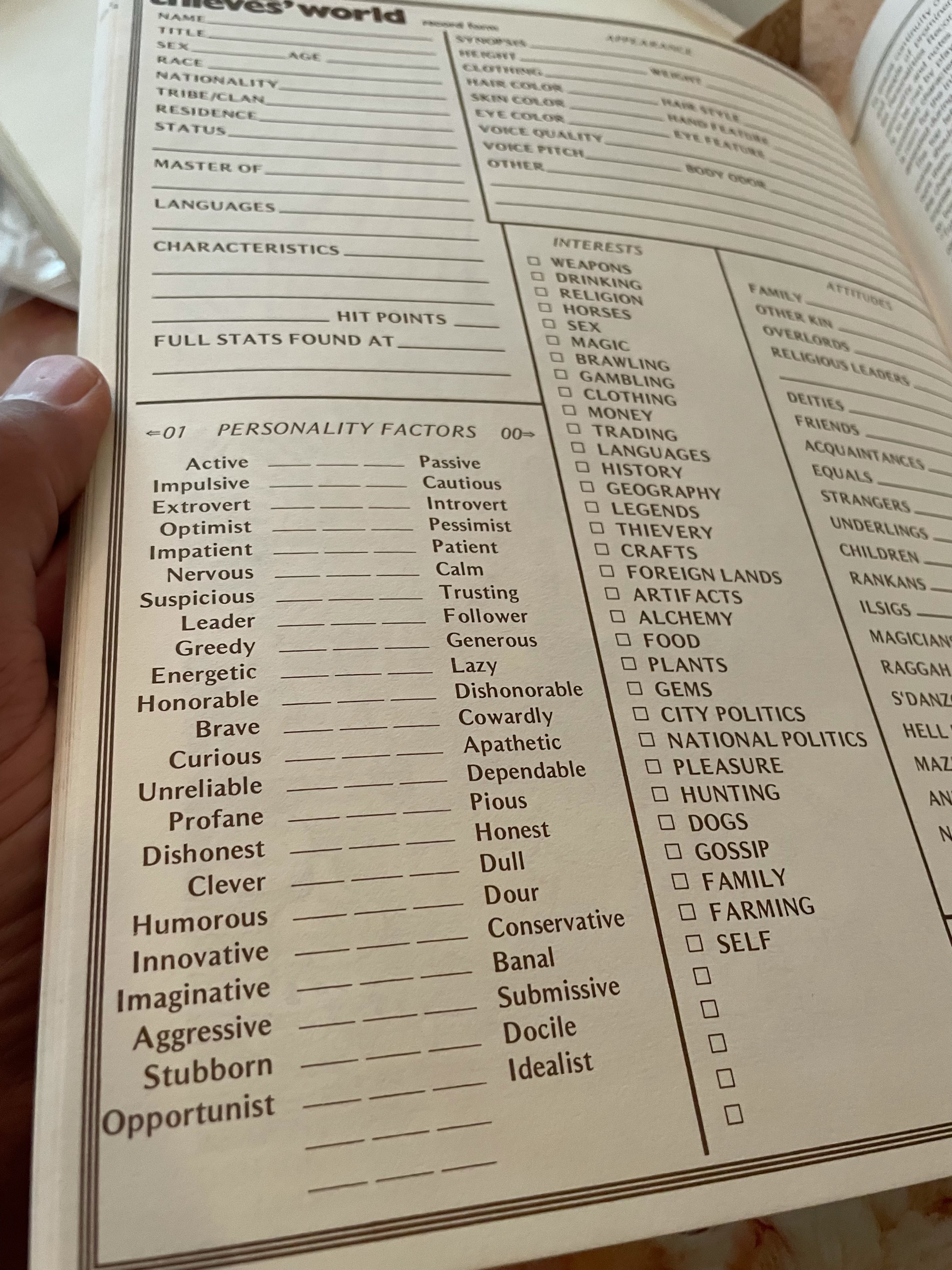

This little box in the corner of the Thieves’ World NPC sheet reminds me of something… 😅🤔

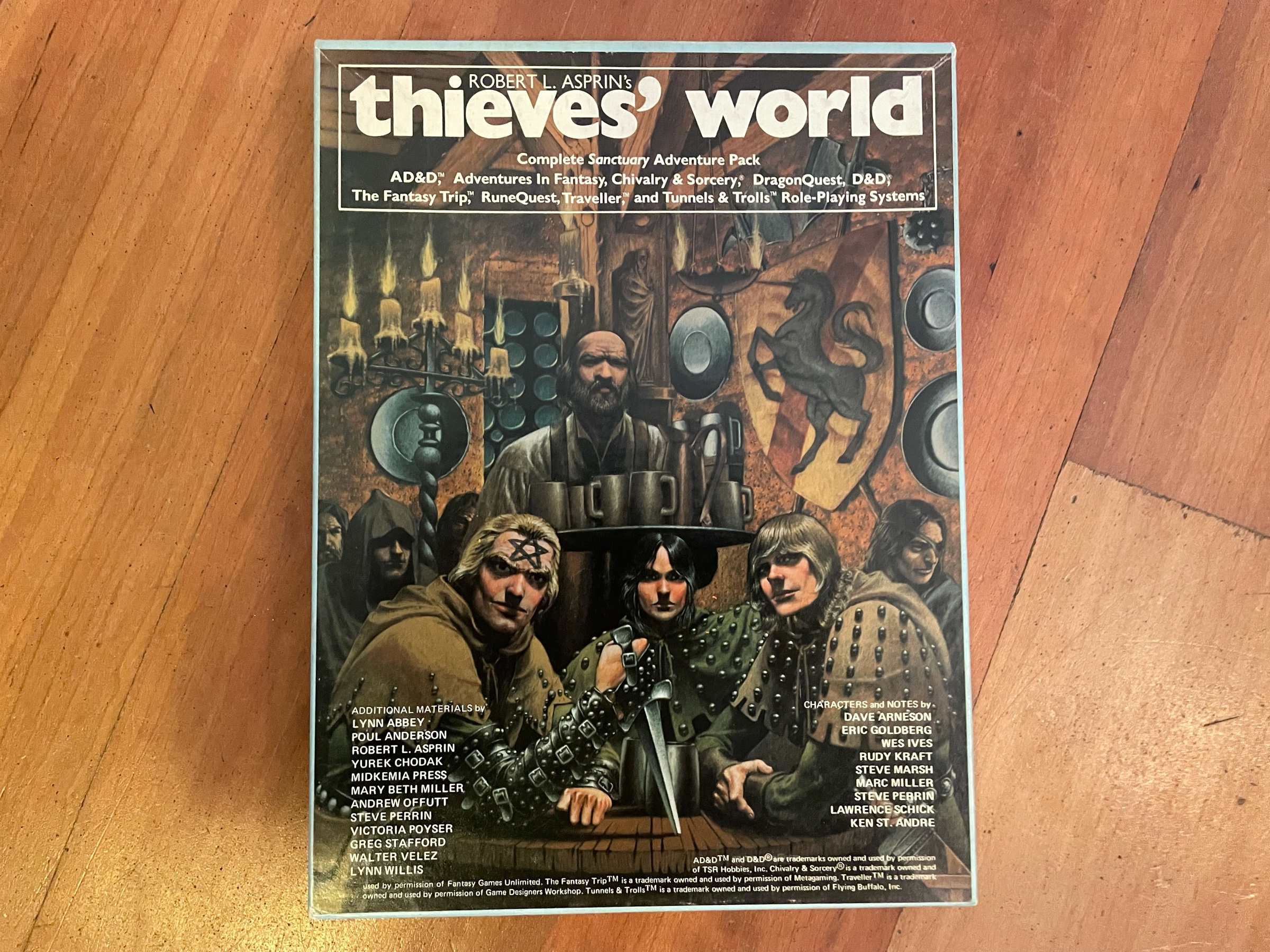

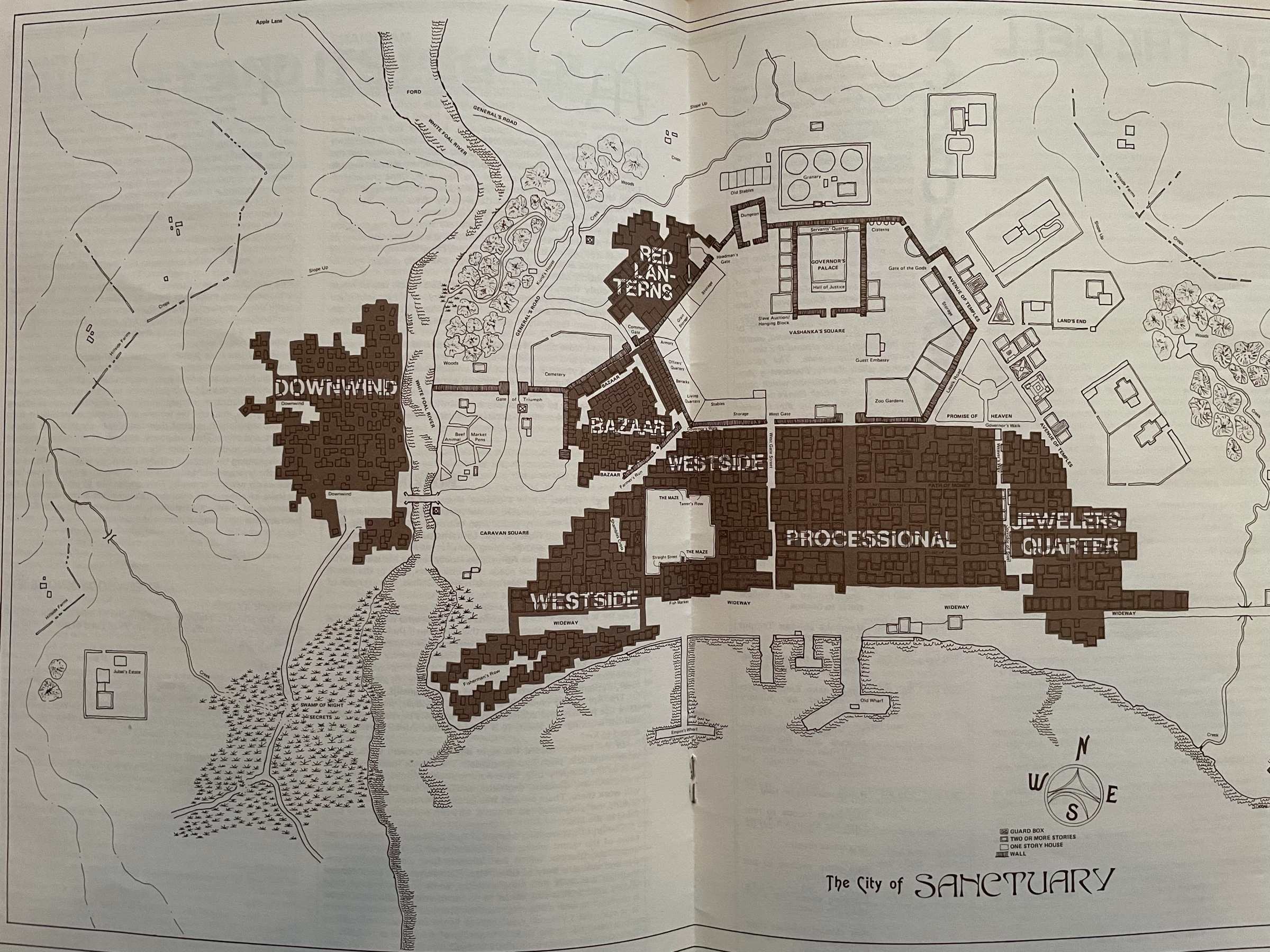

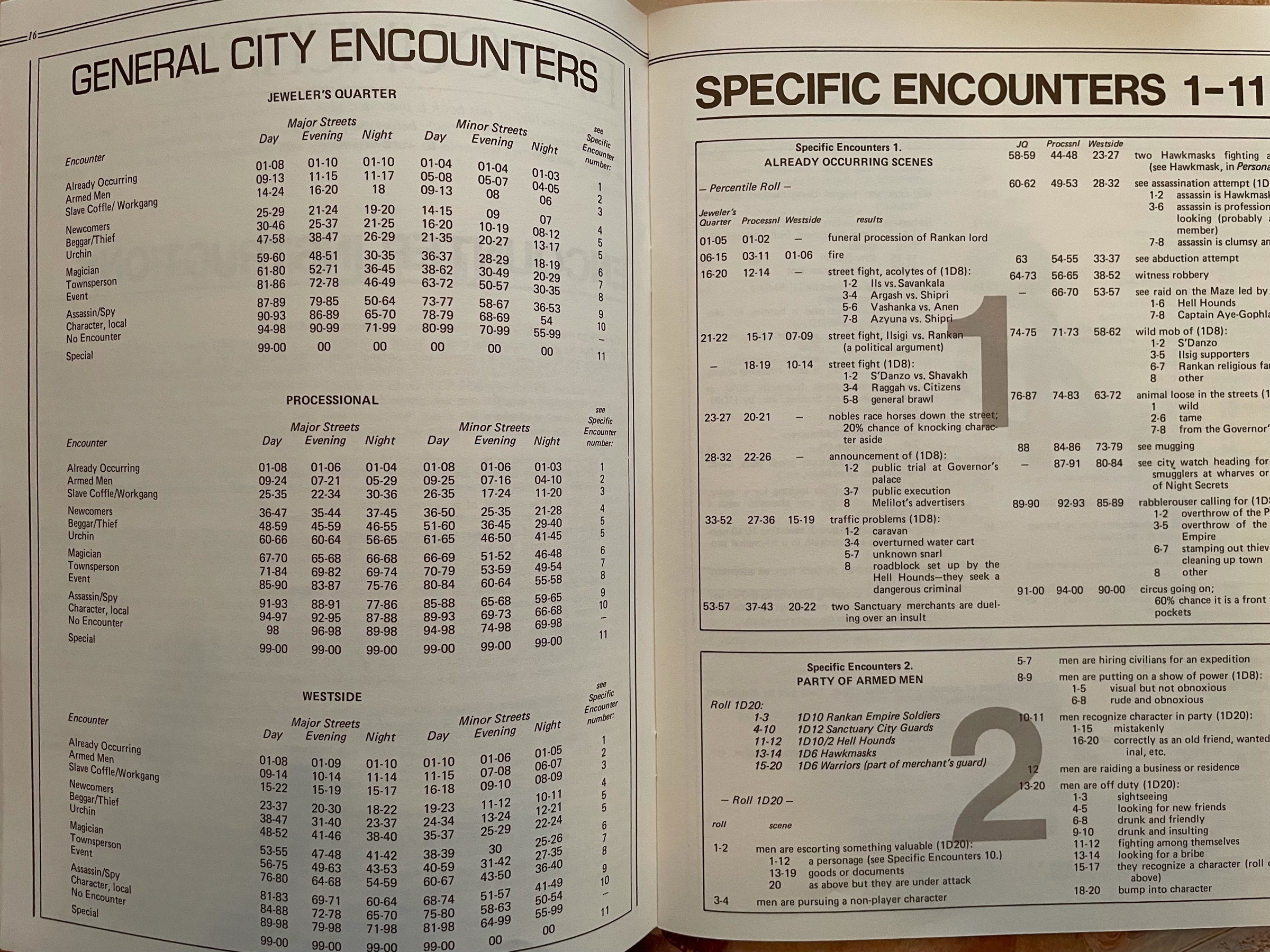

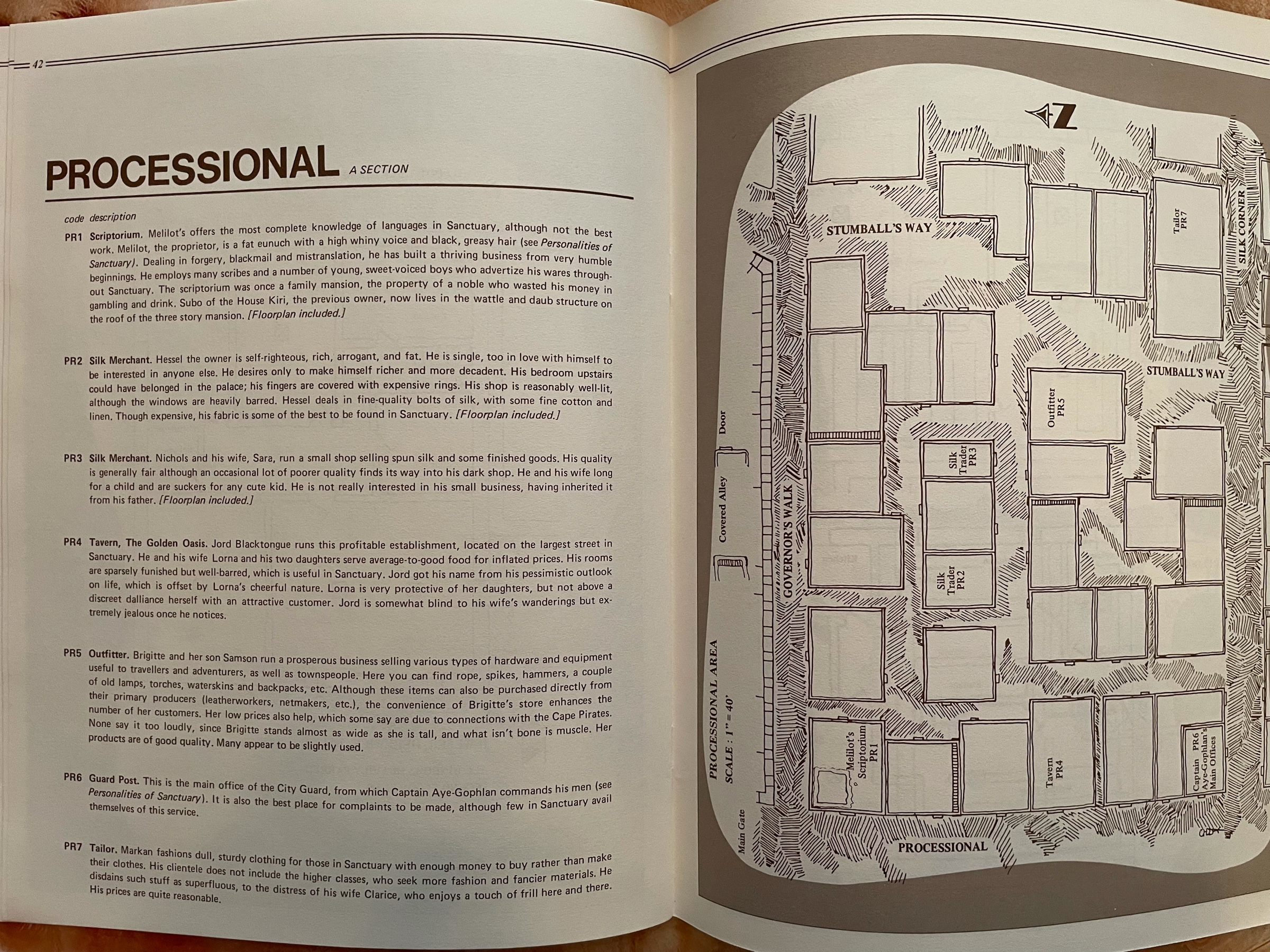

Finally got my hands on a Thieves’ World boxed set. I would have LOVED this as a kid. Maps and encounter tables and “street level” intrigue and NPCs… looks good!

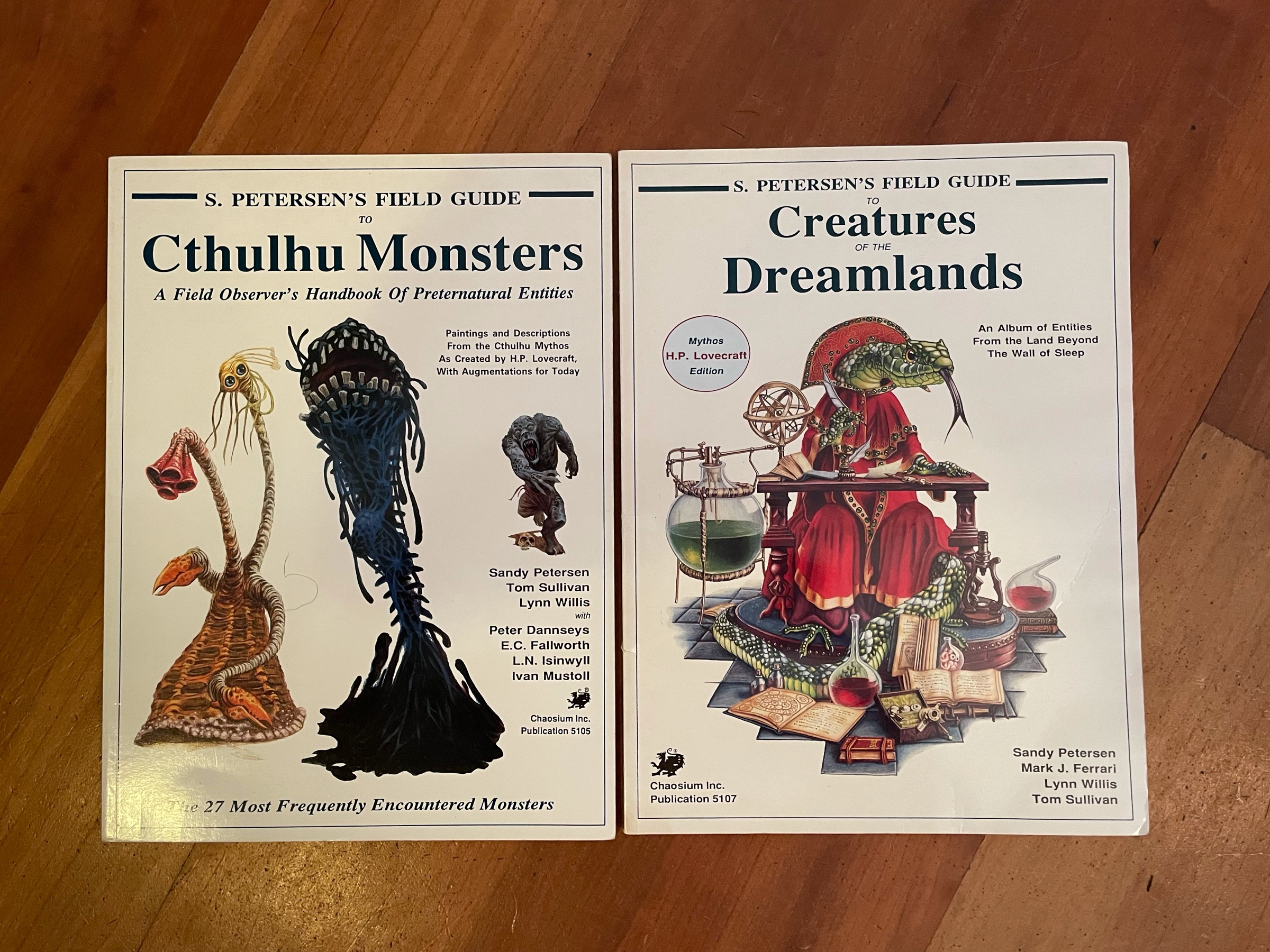

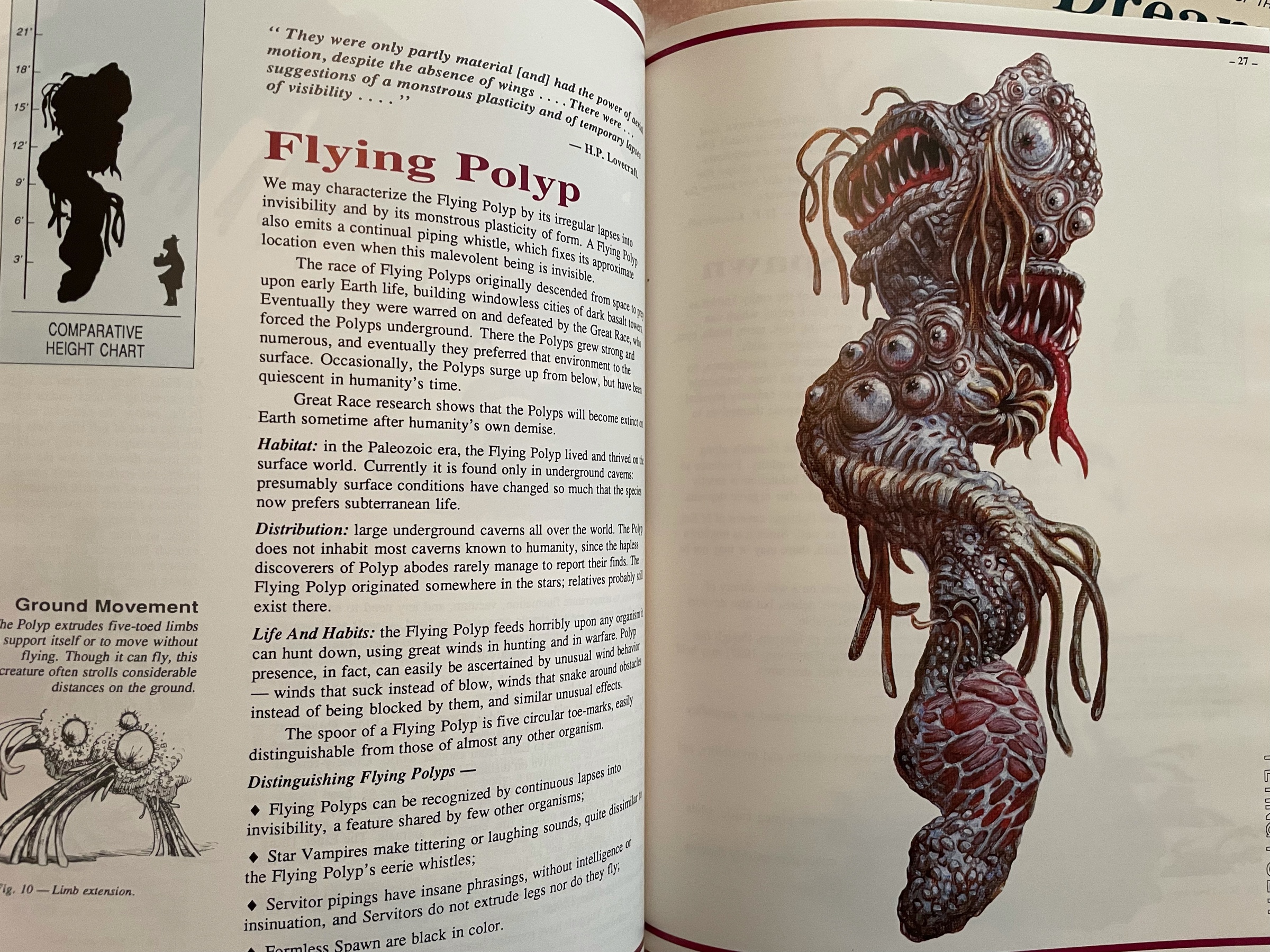

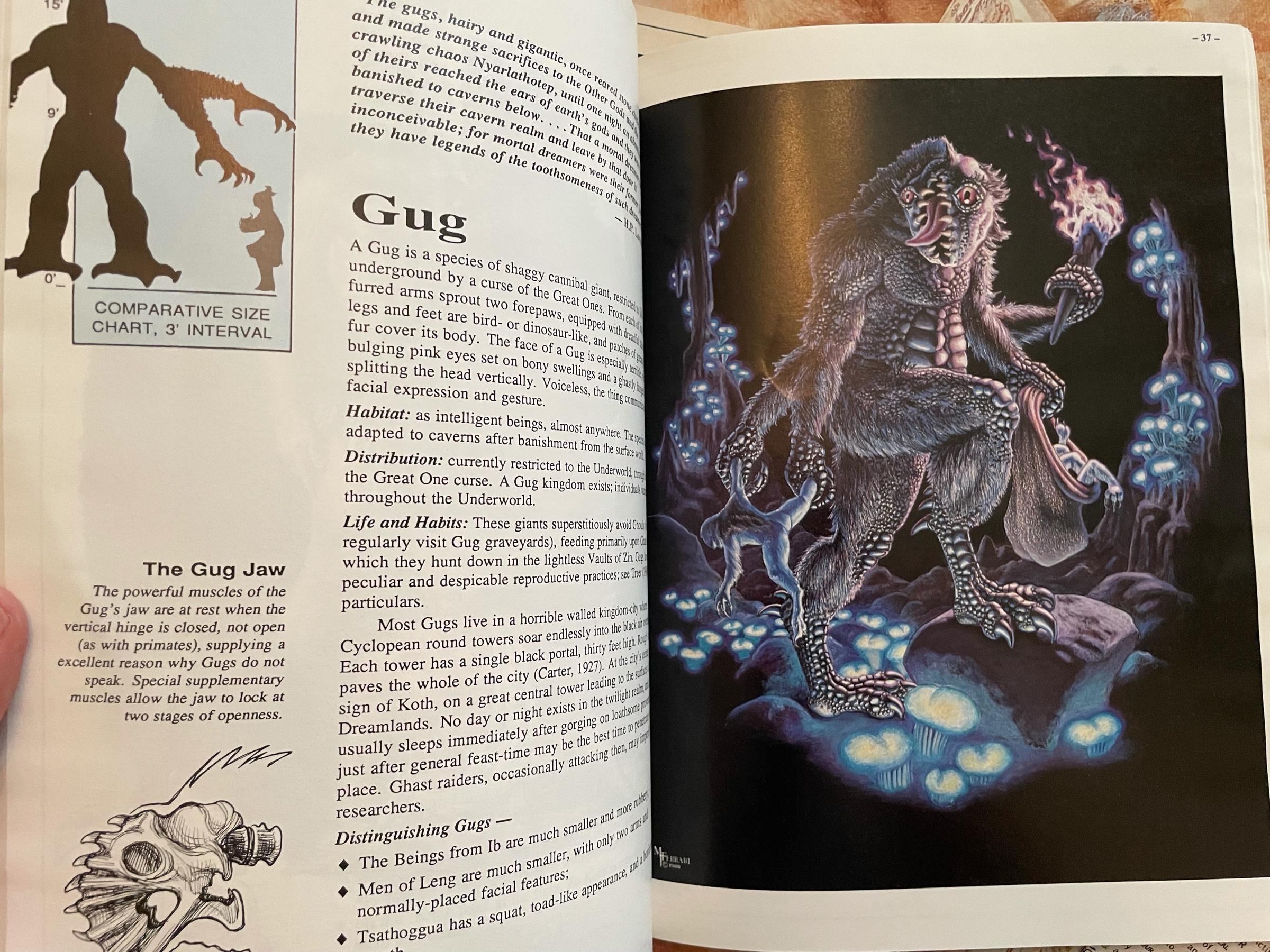

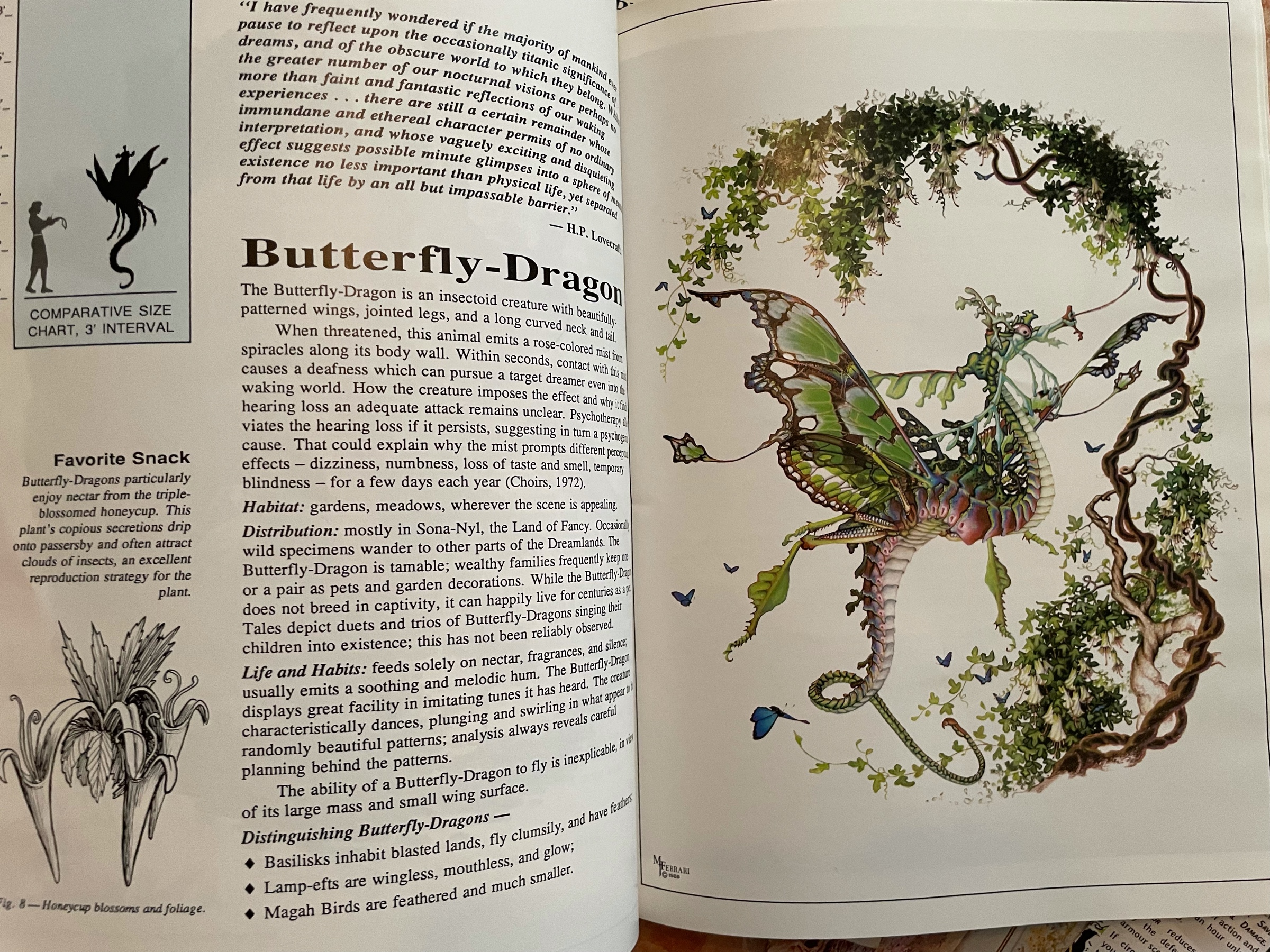

I already had the Dreamlands one in French, but I recently grabbed both in English (they had a decent price on eBay!). For a long time it was my only systemless RPG supplement, and I loved how that, along with its weird illustrations, set it apart!

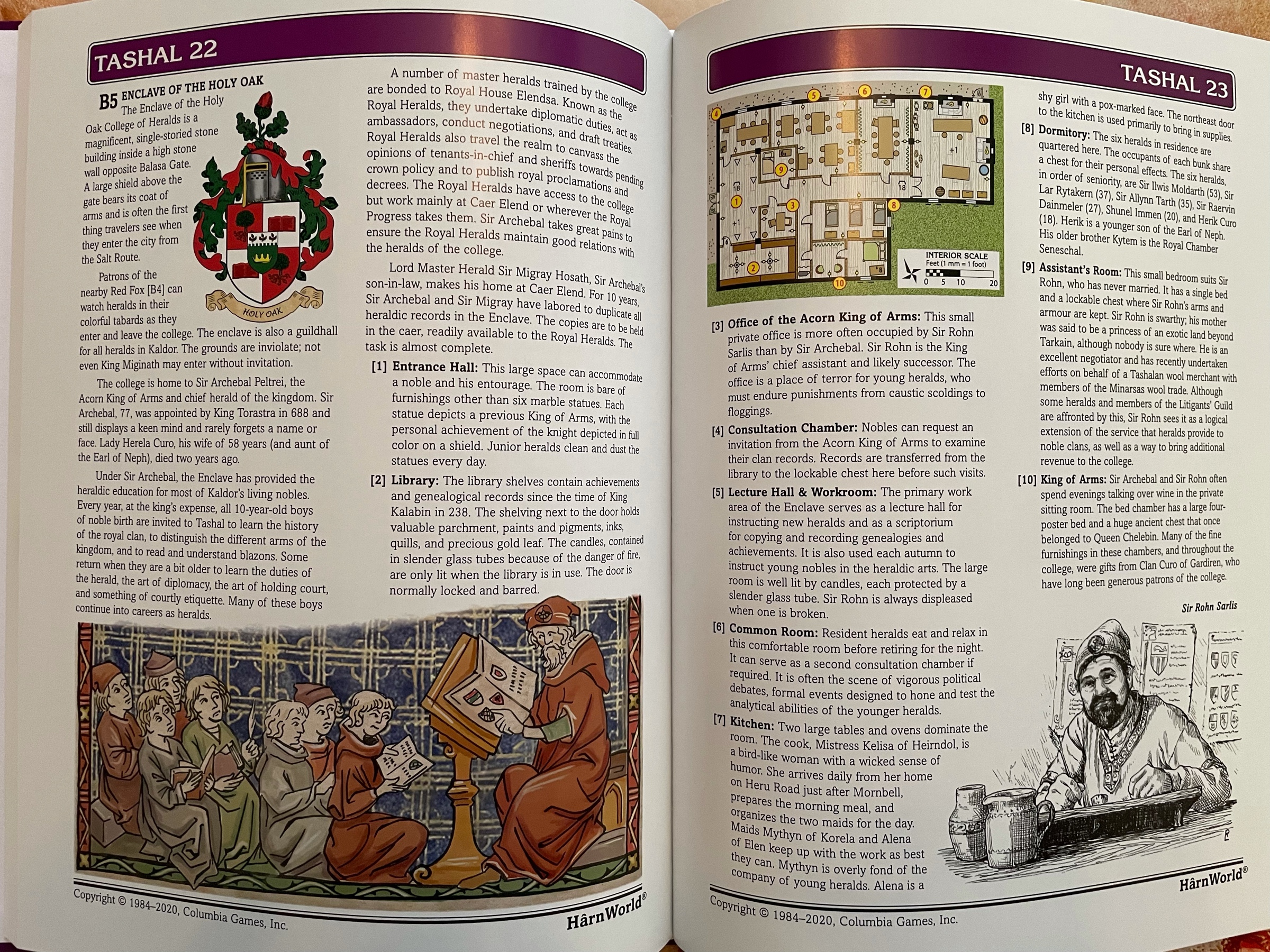

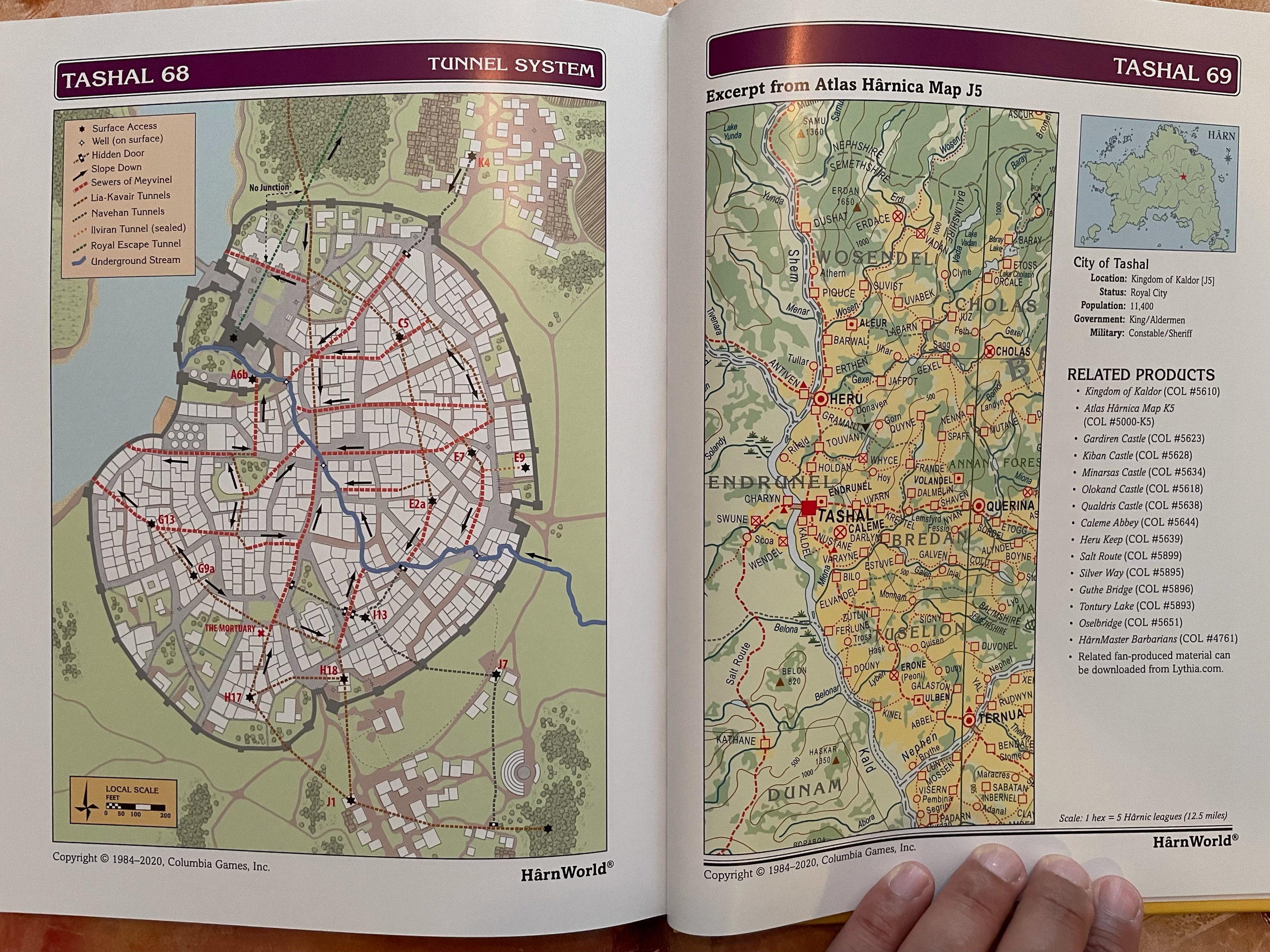

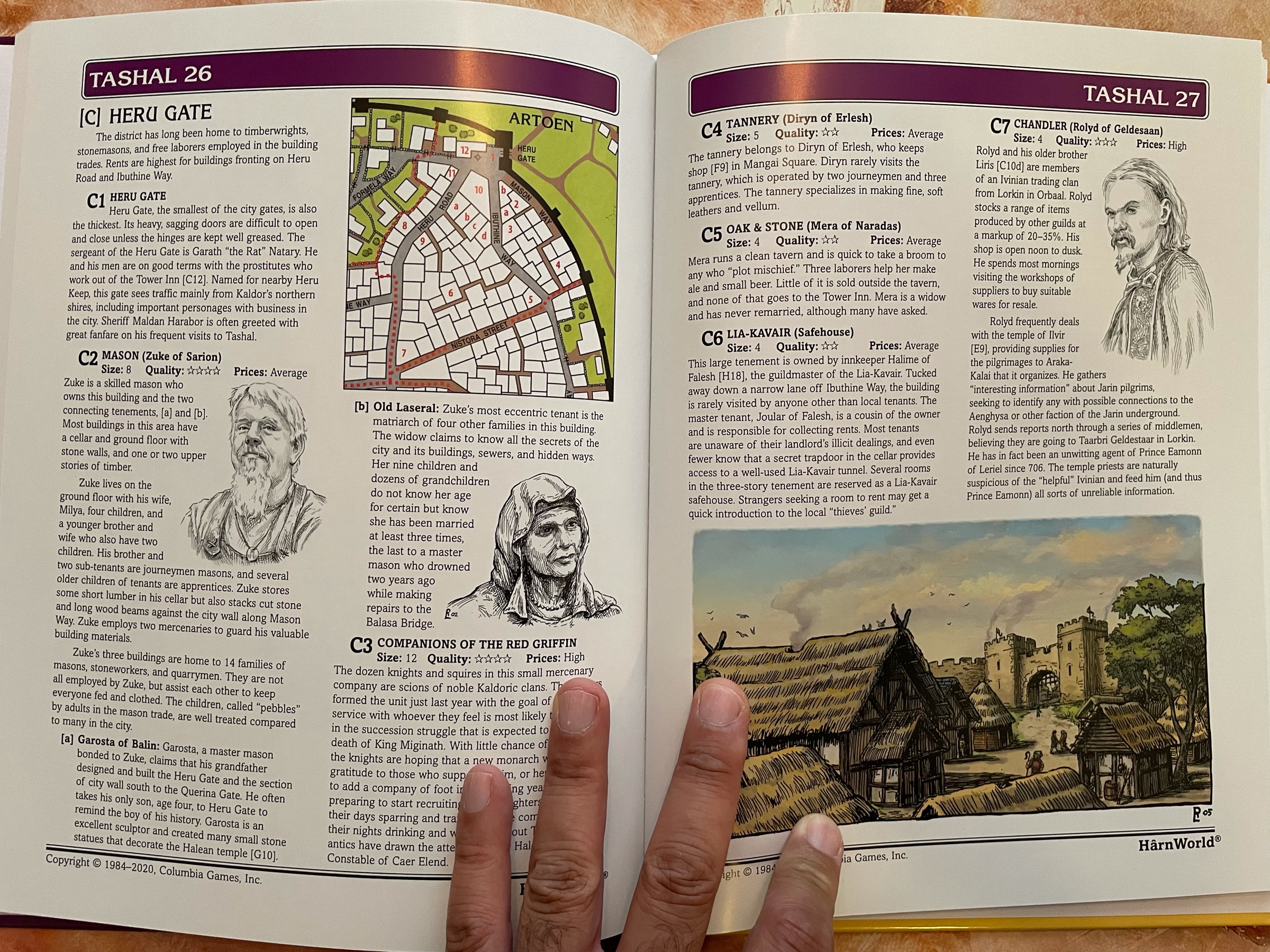

Harn’s Kingdom of Kaldor in hardback! The printing quality is top-notch and the paper smells good. I love this kind of detailed material, and the maps are always awesome.

We just watched Back To The Future 2 with the oldest kid and it still rules! I love the trilogy unconditionally. The shot where Marty comes running back in 1955 right after Doc has sent him away is sooooo goood!