Say hello to mama! (art by… me, inspired by true events)

New arrival! Death In Space #ttrpg

Good morning everyone!

My kid is singing a song about how our dog is super cute, but set to the tune of the Star Wars Imperial March. I’m a bit confused.

Time to watch this again apparently. A kid requested it…

It’s a chill jazz Chrono Trigger cover sort of afternoon here https://www.youtube.com/watch?v=pYgQEjcosP0

Canadian subway scene:

Guy1 (parking his wheelchair): hey move please

Guy2 (ignores him)

1: fucking move!

2: whatever (still ignores him)

Others: dude just move! (guy finally moves)

1: fucking immigrants

O: wtf man we are all immigrants

1: yeah sorry, my grandparents were too

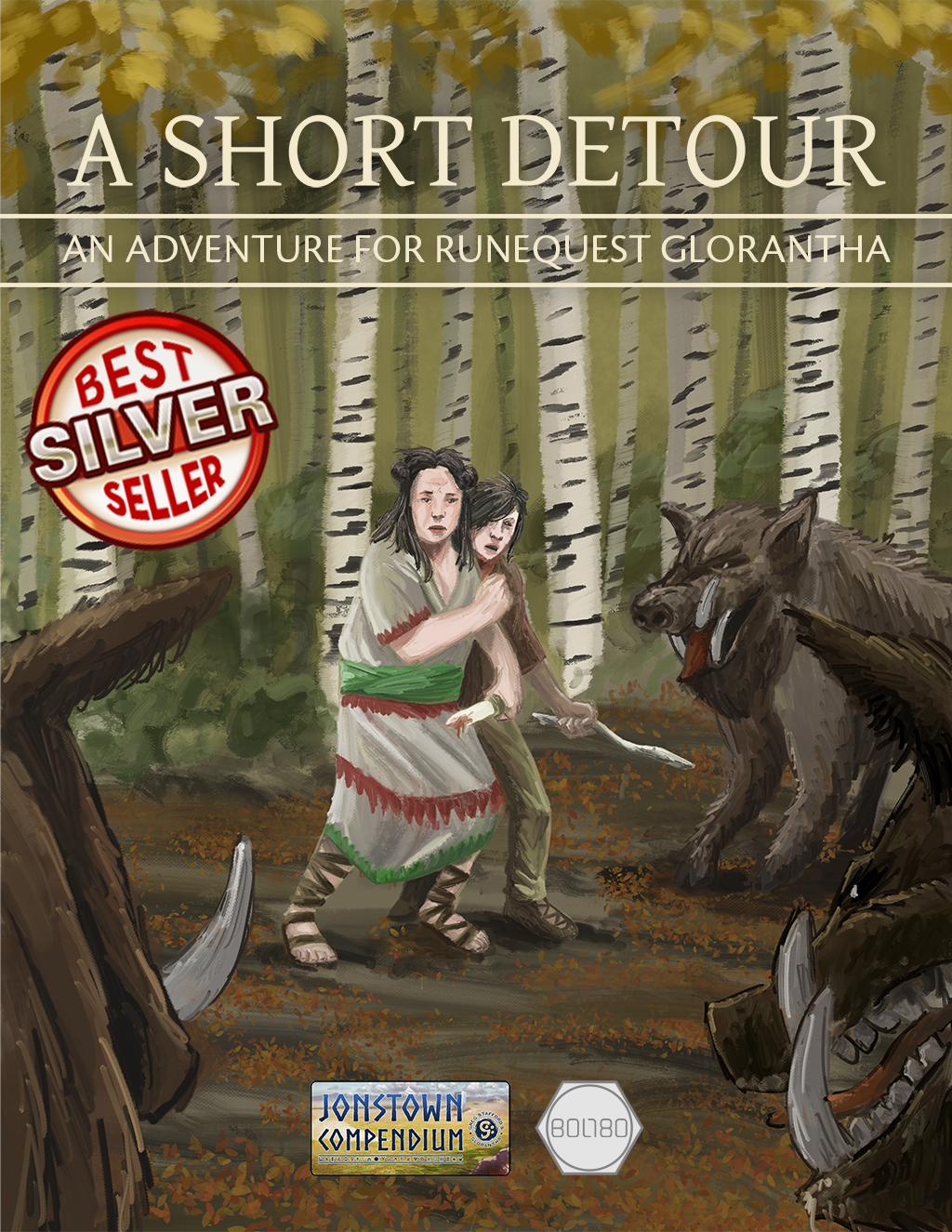

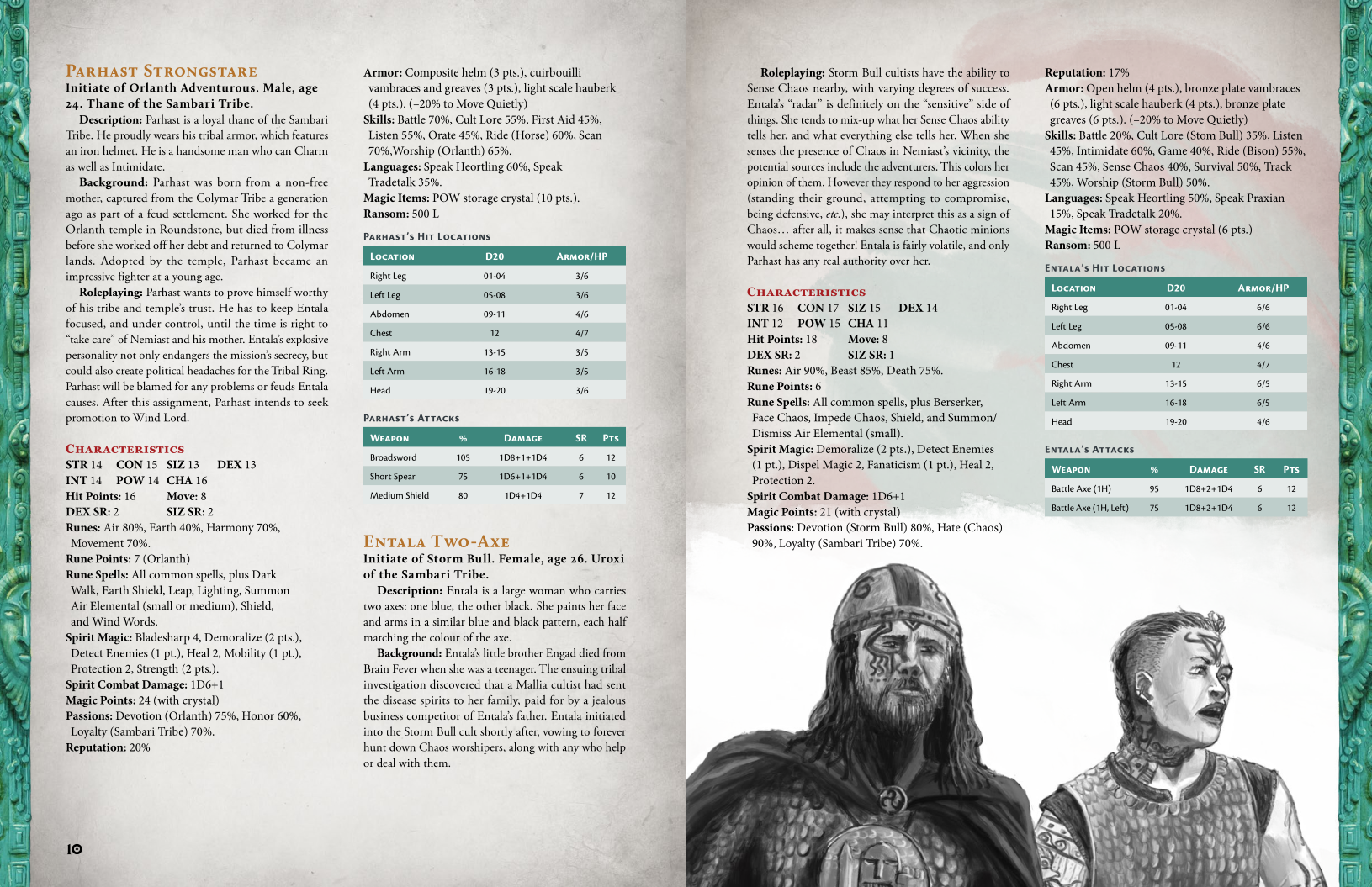

To celebrate the release of Bog Struggles, my previous RuneQuest adventure, A Short Detour (which I just realized is now a Silver best seller, thank you very much), is on sale at almost 30% off! Get it now! http://tiny.cc/a-short-detour

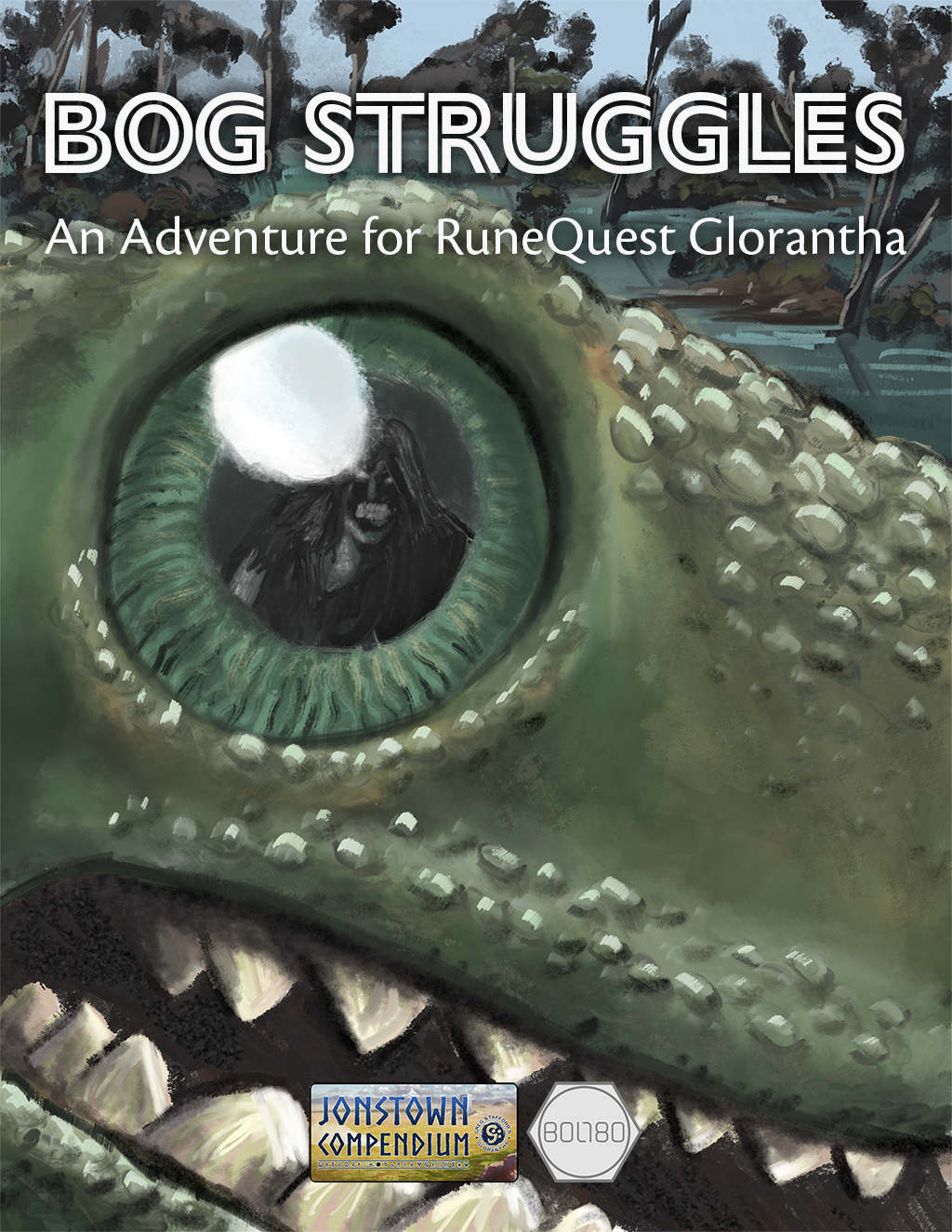

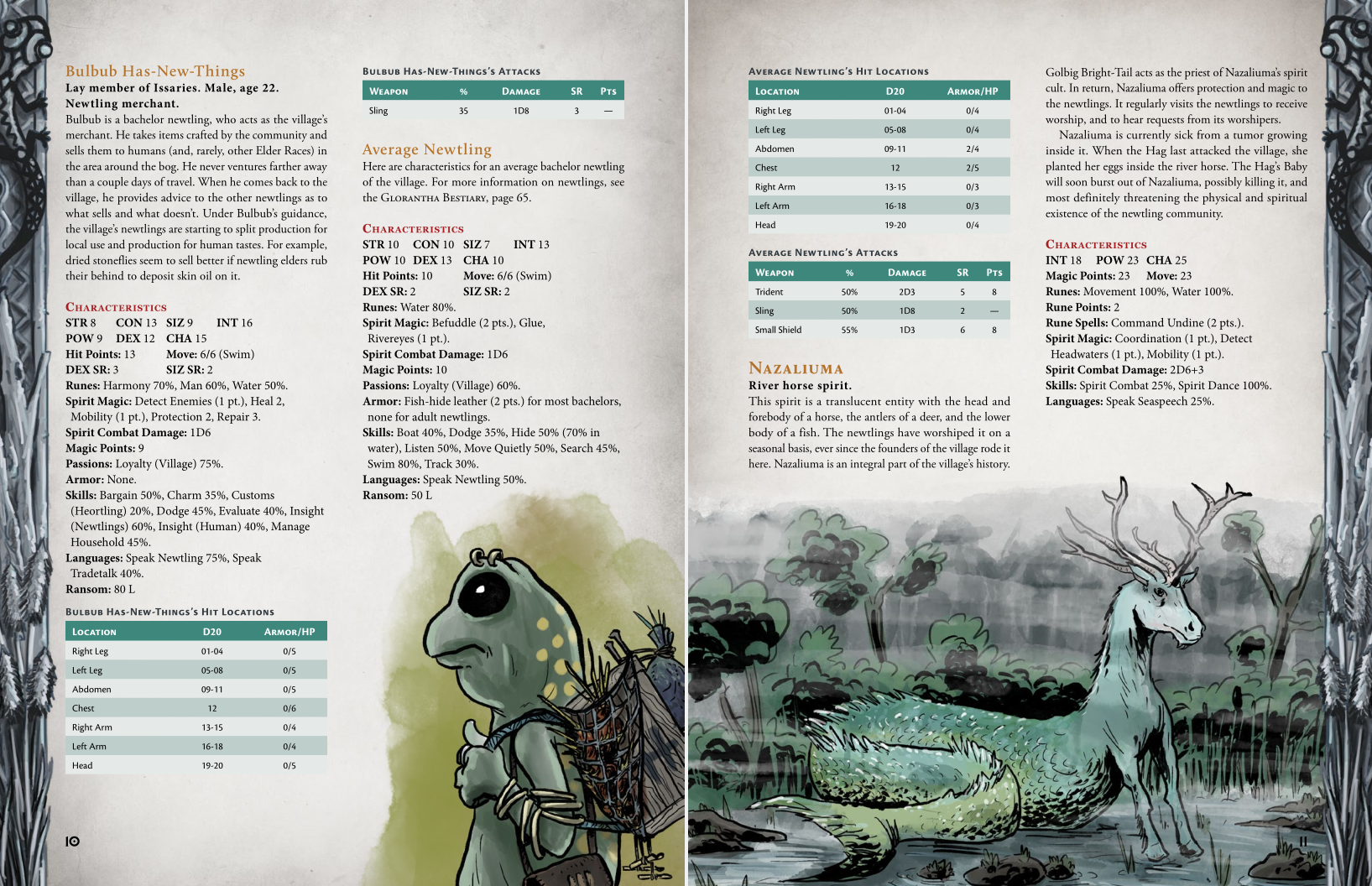

Once more for the morning crowd: my new RuneQuest adventure is out on the Jonstown Compendium! Help some newtlings fight off a horrible threat and join a water cult! http://tiny.cc/bog-struggles

My new RuneQuest adventure has already been reviewed by Pookie! Amazing! http://rlyehreviews.blogspot.com/2022/06/jonstown-jottings-62-bog-struggles.html