Another deep thought from the kids: Swiper can’t stop swiping because that’s not only his name, it’s his nature.

The Stochastic Game

We showed our kids Die Hard this weekend, and at the end one of them exclaimed, “Hey, why didn’t we watch this for Christmas?!” So, you know, that settles that debate.

Oh wow I didn’t realize that my RuneQuest Glorantha adventure now has FIVE 5-star reviews! Thanks a lot to all the reviewers! http://tiny.cc/a-short-detour

Always nice to see some eagles flying overhead while running errands in the neighborhood

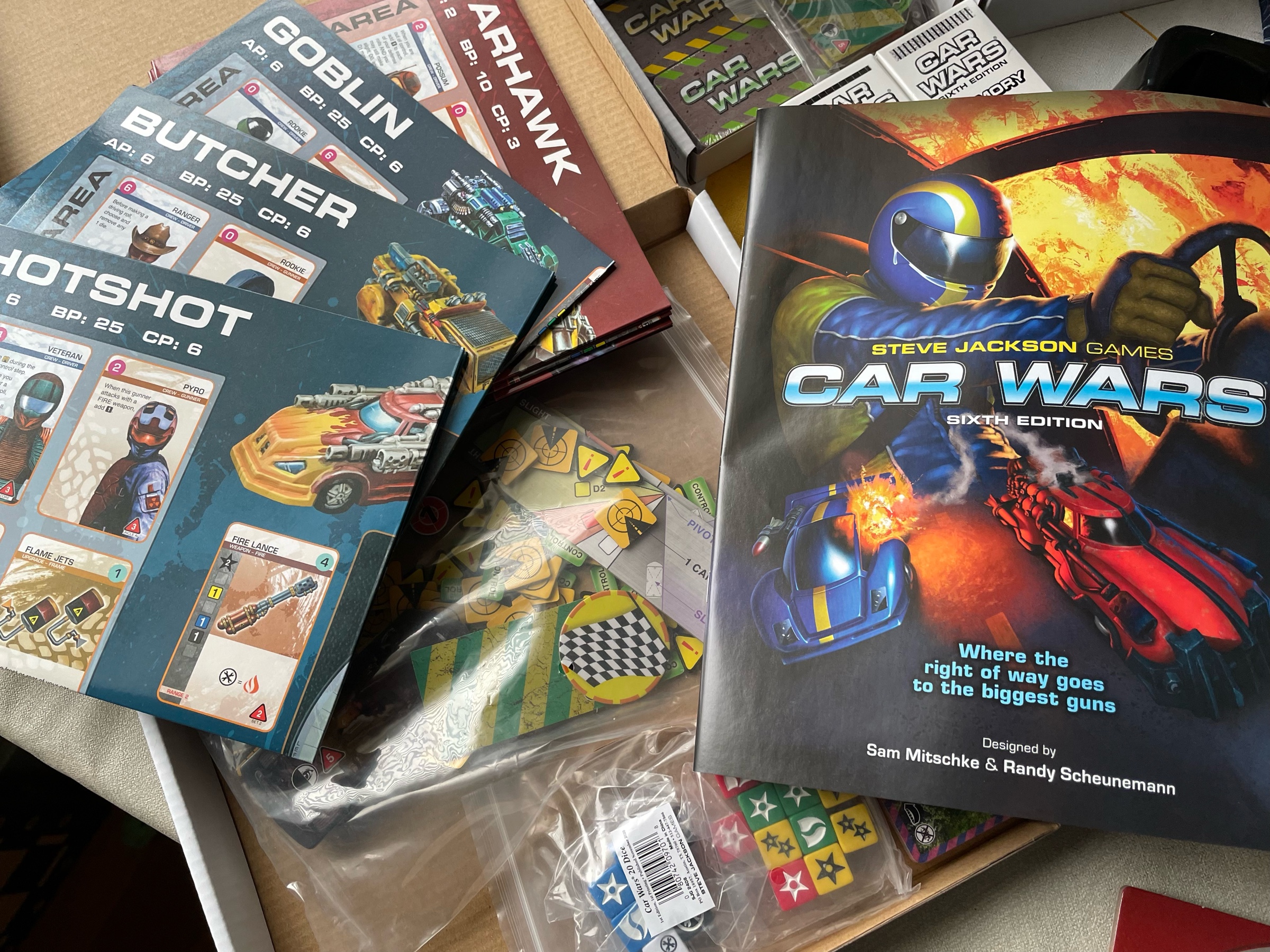

Kickstartled: when you receive a big Kickstarter package that you forgot was finally coming, and it’s much bigger than you expected. For example:

Signed up to run two games at ChaosiumCon… but now that the schedule is up, seeing all these cool game ideas makes me think my scenarios are lame! Ok time for some pick-me-up chocolate! 🍫😋

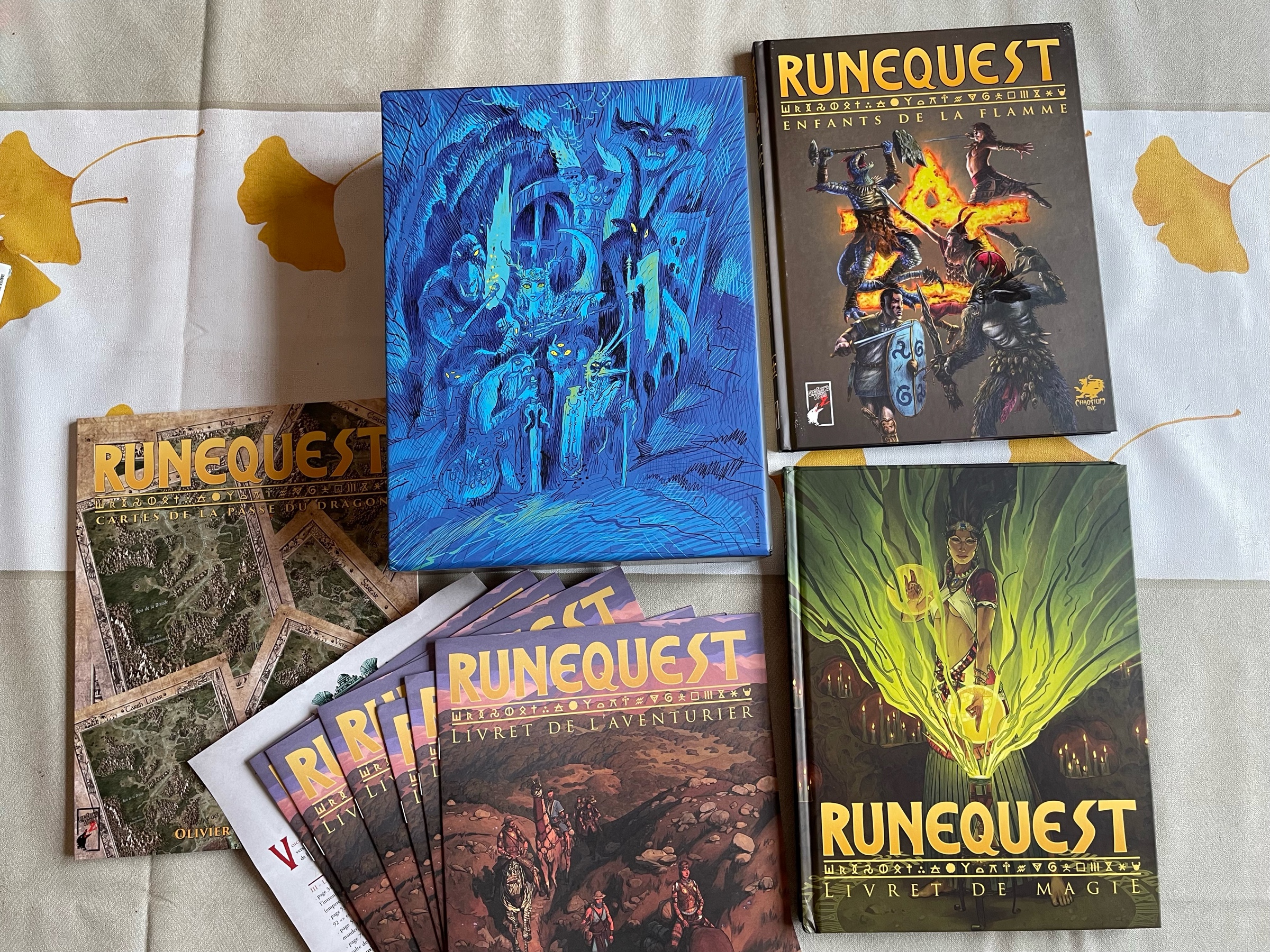

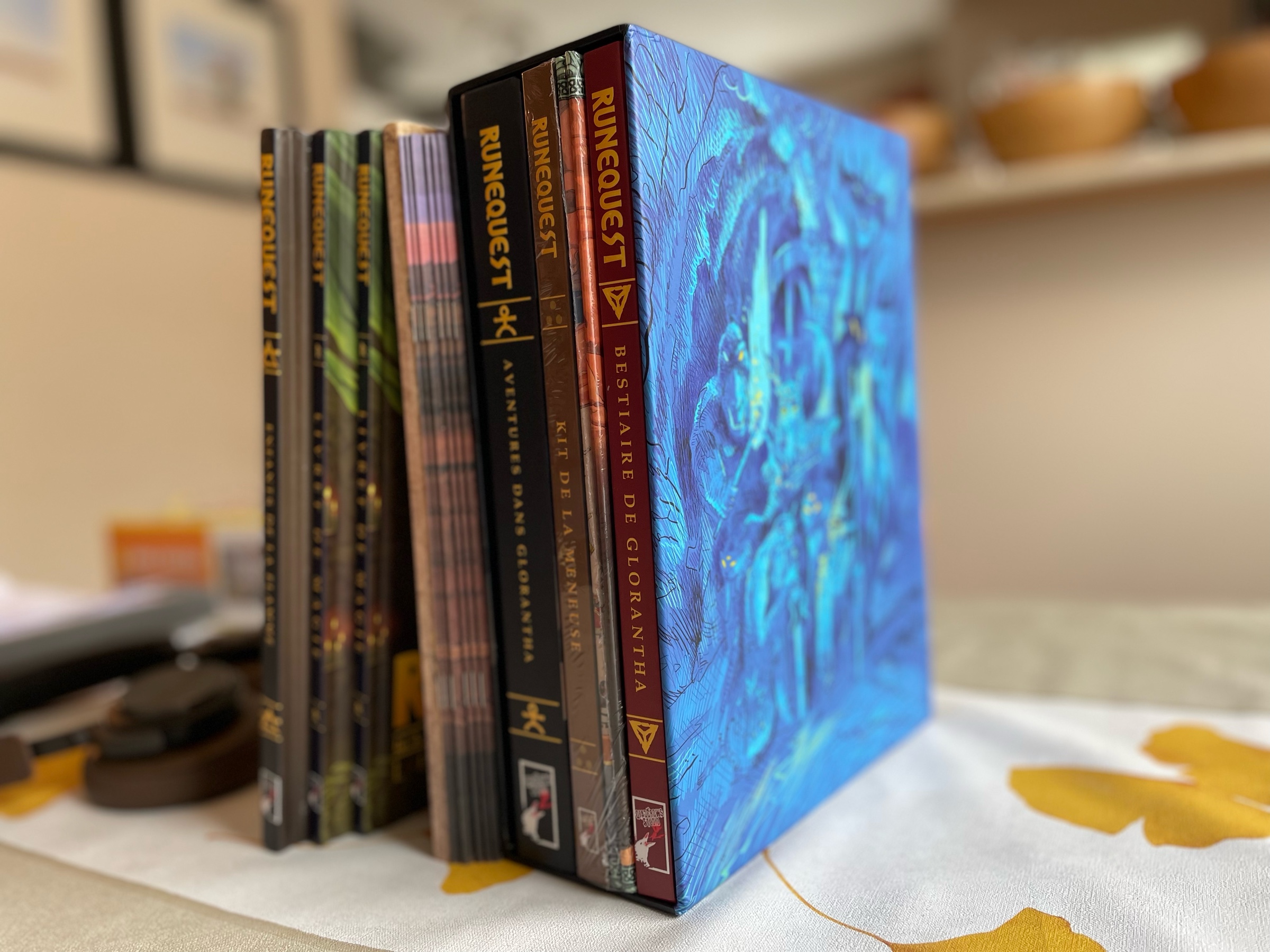

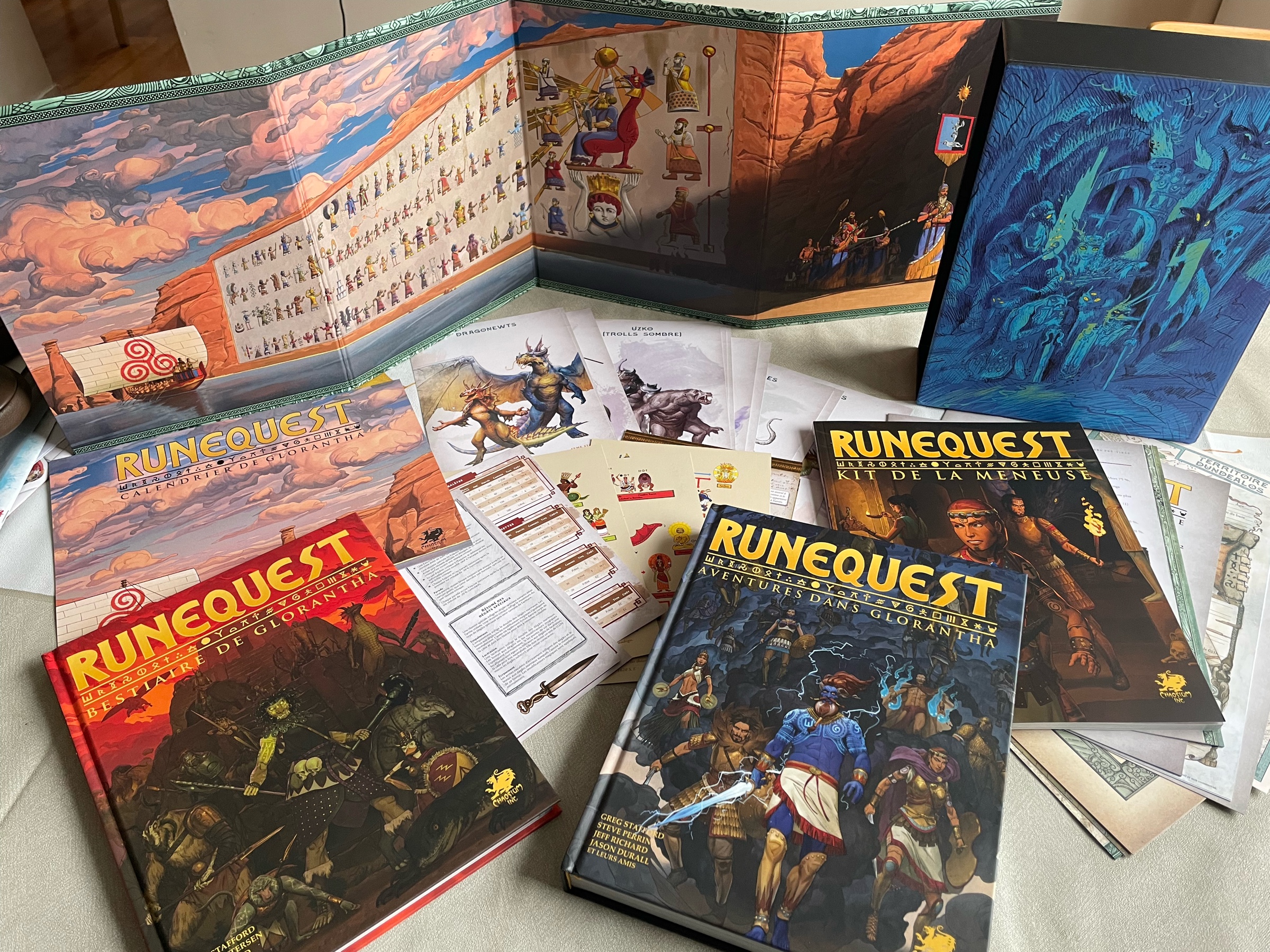

I received my French RuneQuest Glorantha books! Lots of cool exclusive and tweaked stuff in there (but note that only the Dundealos campaign book is new material). Congrats and thanks to Studio Deadcrows!

We watched Holy Grail with the kids (10 and 12) two weeks ago, and one of them is still quoting it randomly almost daily. So I guess I proved that annoying Monty Python referencing occurs naturally in 50% of viewers. Thank you for coming to my TED talk.

I really need to figure out a good process for going from sketch to painting. I’m just fumbling around with brushes and screaming in my head until the piece looks half as good as the sketch was.

The most fun thing about Dreamlands pirate boats is the sea shantaks