Deep thought of the day from the kid: “if Santa really existed, it would actually be less magical”

The Stochastic Game

Ramblings of General Geekery

This is great: GM commentary by Gareth Hanrahan on a 13th Age one-shot. I recognize a lot of the thought process in there! https://pelgranepress.com

“What would a Chromium-only Web look like?” https://www.mnot.net

Return to Monkey Island! There’s a whoooole bunch of callbacks to the first two games here… https://www.youtube.com

Cloud appreciation post! This is what stock photos and sky dome textures are made of!

Good morning!

Good morning! It’s way too hot already. I didn’t come to Canada for the sun and the heat 😡😅

Showtime + BBQ soon!

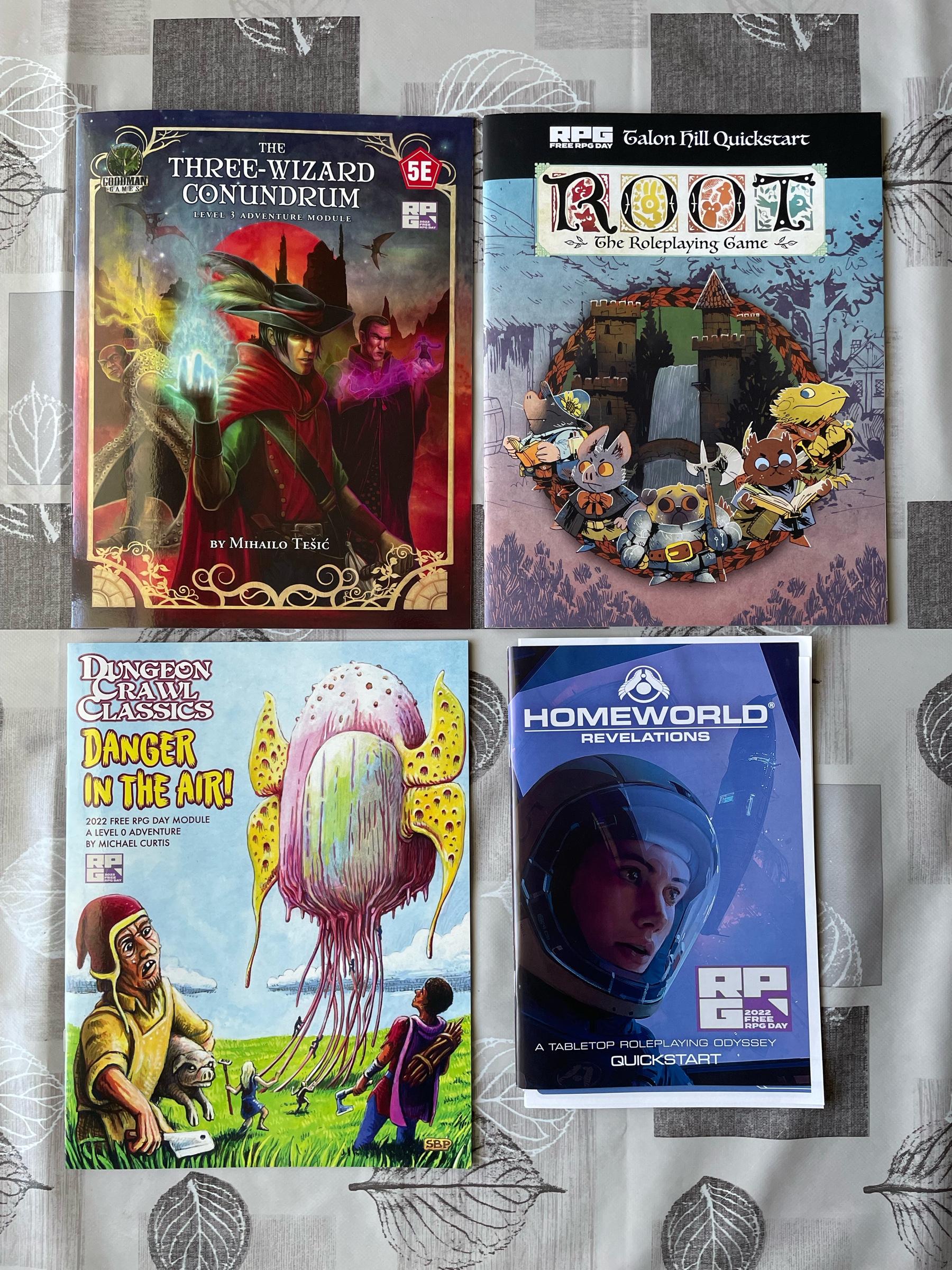

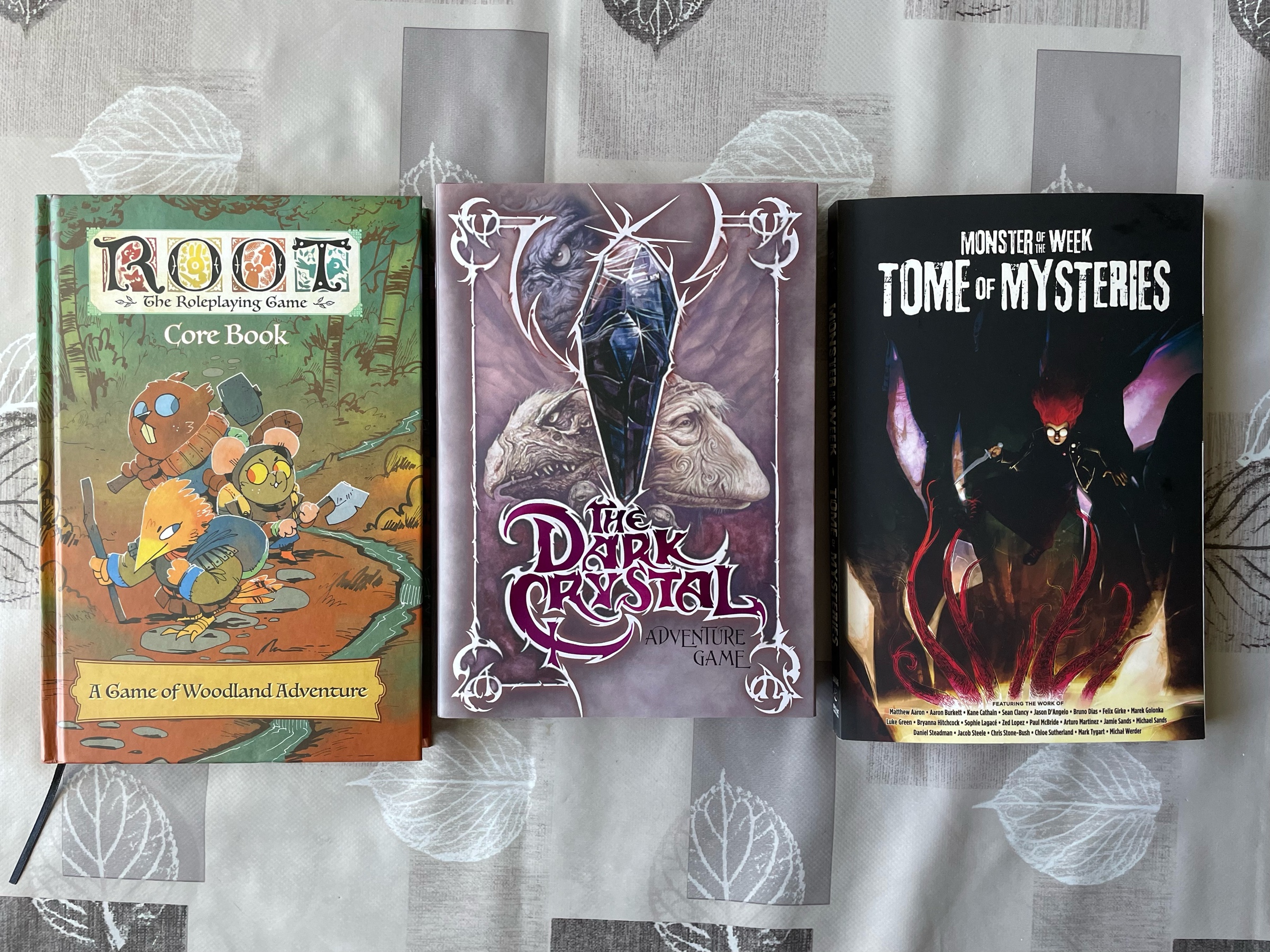

Happy free (and non-free 😋) RPG day!

Good morning! I just wanted some ice cream and ended up wandering into a dragon boat festival thing. Thankfully I escaped. With ice cream.